eISSN: 2093-8462 http://jesk.or.kr

Open Access, Peer-reviewed

Yulim Kim, Hyo Chang Kim, Min Chul Cha

10.5143/JESK.2023.42.6.539 Epub 2024 January 05

Abstract

Objective: This study aimed to identify the optimal point of switching between voice and touch modalities for modality selection, regarding the number of characters and hierarchy-based menu structures.

Background: Technological advances have enabled the development of multimodal interaction in modern smart devices. Selecting the most adequate modality during interaction with devices is crucial since the interface is regarded as a significant element that can enhance usability and overall satisfaction.

Method: To achieve the research objective, the experiment was carried out by developing a modality selection task program through JavaScript. The experiment included three distinct tasks: Text entry, Simple keyboard touch, and Hierarchy menu. During the modality selection task, participants were given the option to select the more efficient modality between voice and touch modalities based on their preference.

Results: The Text entry task evaluated the point of switching modalities based on the number of characters, but no significant difference in the probability of voice usage was found across the numbers of characters. Therefore, the syllable per touch ratio was adopted as an alternative measure in the Simple keyboard touch and Hierarchy menu tasks. The predicted probability of voice usage differed significantly with the syllable per touch ratio, and the optimal point of switching modalities was identified at approximately two syllables per touch in the Simple keyboard touch task and five in the Hierarchy menu task.

Conclusion: The results indicate that the point at which users switch between voice and touch modalities depends on the menu structure, and the syllable per touch ratio is a critical factor to consider in modality selection.

Application: The findings of this study provide significant insights for developers aiming to design multimodal interactions for various menu structures.

Keywords

Smart devices, Multimodal interaction, Voice user interface (VUI), Touch-based interface, Modality selection, User experience

Smart devices are now encountered in many settings, and people interact with them through multiple interfaces. Traditionally, touch has been the primary input modality (Almeida and Alves, 2017; ULUDAĞLI and Acartürk, 2018). However, technological advances have given rise to various other modalities, resulting in the development of modern smart devices and applications based on multimodal interaction (Mohamad Yahya Fekri and Ajune Wanis, 2019). Along with touch-based interfaces, advances in speech recognition have enabled the Voice User Interface (VUI), which is now widely available on smart devices (Nguyen et al., 2019). VUI allows users to interact with their devices using voice input, providing a convenient and hands-free alternative to traditional touch-based interfaces (Song et al., 2022). Popular virtual assistants with voice interfaces, such as Siri and Amazon Alexa, enable users to ask questions, play music, and manage smart home devices simply by speaking (Parnell et al., 2022).

A multimodal interface is considered important because an appropriate combination of input modalities can increase usability and enhance overall satisfaction (Naumann et al., 2010). Thus, selecting the most suitable modality while interacting with devices is essential. With the emergence of VUI, the range of interface modalities that users can choose from when performing tasks has broadened, and voice-based technologies are growing rapidly because of their various advantages. The global smart speaker market was expected to grow by 21%, resulting in 163 million sales in 2021 (Heater, 2020; Moore and Urakami, 2022). However, despite the continuous development of voice-based technologies, their actual usage is increasing only slowly. In the case of VUI in In-Vehicle Infotainment (IVI) systems, many users still prefer the conventional touch-based interface despite the advantages of voice input, because of unsatisfactory interactions with voice assistants (Kessler and Chen, 2015; Kim and Lee, 2016). Thus, although VUI offers conveniences such as straightforward usage and hands-free interaction, its adoption has been gradual. Consequently, this study compares the touch modality, which users commonly interact with, and the voice modality, which can demonstrate its advantages when appropriately utilized.

The objective of this research is to investigate the factors that influence users' choice between voice input and touch input in a multimodal interaction system under different menu structures, with a focus on the number of characters. The study aims to identify the point at which users find VUI more convenient and prefer to use it, especially in the context of hierarchy-based menu structures. To achieve this goal, the study comprises three tasks. First, it investigates the number of characters at which users prefer to switch from touch input to voice input in a non-hierarchy environment. Second, it explores the syllable per touch ratio to find the point of switching between input modalities in a non-hierarchy environment. Finally, it examines the syllable per touch ratio in a hierarchy environment to identify the point at which users prefer to switch between input modalities.

This study makes a valuable contribution to the field of multimodal interaction by investigating the optimal point at which users prefer to switch between different input modalities, in relation to the number of characters and hierarchy-based menu structures. A unique approach was taken by examining the point of switching between voice and touch modalities within a menu structure. The results of this study can improve the overall usability and efficiency of multimodal interaction, which can enhance the user experience. This is particularly important given the growing use of multimodal interaction systems in various settings. Additionally, the practical implications of these findings are significant, as they can inform the development of more user-friendly and efficient multimodal interaction systems in the future.

1.1 Modality selection

Modality selection refers to the process of choosing between different interaction modalities (Atrey et al., 2010). Providing users with control over modality selection allows them to use the least error-prone modality and switch to alternative modalities when needed (Reeves et al., 2004). Multimodal interaction offers users the flexibility to select the input modality that best aligns with their needs and capabilities (Naumann et al., 2010). The decision-making process behind selecting one modality over another is complex. Despite the potential for enhanced perceived quality with multimodal interaction, users tend to adhere to a single input modality rather than using several (Wechsung, 2014). As indicated by Schaffer et al. (2011), efficiency and effectiveness play a significant role in modality selection. Moreover, the number of required interaction steps heavily impacts modality selection (Wechsung et al., 2010). Hence, research is needed into how modality selection occurs at different levels of interaction.

1.2 Menu structure

As devices have advanced and diversified in the functions they offer to users, research has been conducted on various menu structures, which can differ based on the ways each function is executed. Menus, as part of the selection process, appear when users intend to use specific functions, leading to extensive research on menu structures. Menu structures can be broadly categorized into two types (Snowberry et al., 1983; Miller, 1981): the Non-hierarchy menu structure and the Hierarchy menu structure. A Non-hierarchy menu structure (Christie et al., 2004) is composed of a single layer and presents the complete set of individual options simultaneously, offering users a wide array of choices from which to find the appropriate option. A Hierarchy menu structure (Billingsley, 1982) consists of sequentially presented menus, with the most general descriptions at the top of the hierarchy, becoming more specific as users navigate downward. At the lowest level, users can select their desired goals.

Accordingly, when using touch input to access specific device functions, the hierarchical manner in which menu structures are organized can influence the interaction process. With voice input, however, users can input information regardless of the hierarchical structure, as it allows for voice-based interaction without constraints. Given that the mode of input could change based on the menu structure from a modality selection perspective when users engage in specific tasks, differences are expected to exist in the process of selecting options through touch and voice input within these two menu structures. Thus, there is a need to explore the differences in the selection of input modalities according to different menu structures.

1.3 Research objective

In this study, the objective is to investigate how the number of characters, syllable per touch, and hierarchy-based menu structures may influence modality selection by identifying the switching point of input modalities.

To achieve this, unlike previous works, which typically test modality selection under uniform conditions, this study is designed to align with different menu structures encountered during real-world device usage. These structures include Text entry, Simple keyboard touch, and Hierarchy menu. Furthermore, we evaluated how the number of characters and the syllable per touch ratio in each menu structure affect the point of switching between touch and voice modalities. The following research question and hypotheses were formulated to be tested in a laboratory experiment.

RQ: How will the number of characters, syllable per touch, and hierarchy-based menu structures influence users’ input choices?

H1-1) As the number of characters increases, the usage of voice input is expected to increase.

H1-2) As the syllable per touch decreases, the usage of voice input is expected to increase.

H1-3) Voice input usage is expected to be higher in hierarchy menu structure compared to non-hierarchy menu structure.

2.1 Material

The experiment was conducted by developing a modality selection task program through JavaScript. Three tasks were involved in the experiment: Text entry, Simple keyboard touch, and Hierarchy menu. During the modality selection task, participants could choose between voice and touch modalities depending on their preference.

2.2 Modality selection task

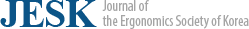

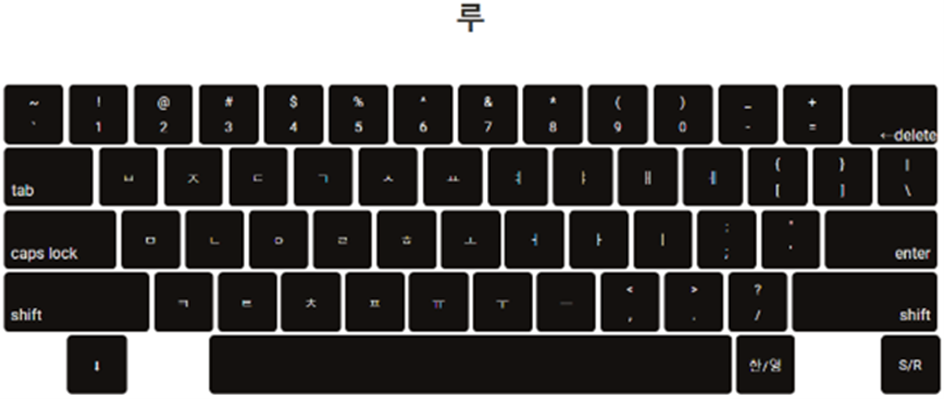

In the modality selection task, participants chose whichever modality, voice or touch, they perceived to be more efficient. Participants were presented with three tasks: Text entry, Simple keyboard touch, and Hierarchy menu. In the Text entry task, participants were presented with a realistic QWERTY keyboard with labeled letter keys to press, mimicking a real-world typing scenario (Figure 1). This task was chosen because it represents the general text entry layout that most users encounter when entering text on a device to execute a specific function. In the Simple keyboard touch task, participants were presented with the same QWERTY keyboard without letter labels and were instructed to press the green buttons that appeared, in a manner similar to selecting only the first consonant of each character (Figure 2a). This task uses the same keyboard format as the Text entry task but represents simplified text entry, in which users enter only the first consonant of each character rather than all of its consonants and vowels. Finally, in the Hierarchy menu task, participants were shown a 3 × 3 matrix of nine words containing a search target to press, resembling navigational sections on websites (Figure 2b). The task was structured to reflect a sequential menu, which simplifies function discovery by displaying basic categories on the initial screen and requiring users to navigate through subsequent screens for specific sub-functions. The Text entry and Simple keyboard touch tasks presented non-hierarchical environments, while the Hierarchy menu task featured a hierarchical environment. The aim of this study was to compare the efficiency of voice and touch modalities in these distinct task environments.

2.3 Analysis

In the Text entry task, the number of characters was analyzed as the independent variable. Since entering a single character requires inputting both consonants and vowels through touch, the number of touches always exceeds the number of characters. Thus, in this case, the analysis was based on the number of characters rather than the syllable per touch ratio. The syllable per touch ratio is the number of syllables that must be spoken by voice for each touch input that would accomplish the same selection. Although the Simple keyboard touch task shares the non-hierarchy menu condition with the Text entry task, it prevents the number of touches from exceeding the number of characters by using only the first consonant of each character. Therefore, to analyze it from a proportional perspective, the task employed the syllable per touch ratio as the independent variable. Similarly, in the Hierarchy menu task the number of touches did not exceed the number of characters, so the syllable per touch ratio was again adopted as the independent variable. Consequently, the Simple keyboard touch and Hierarchy menu tasks were analyzed on the same basis using the syllable per touch ratio, allowing comparison between the non-hierarchy and hierarchy menu structures.
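As a minimal sketch of the ratio defined above, the following Python snippet divides the number of syllables that would be spoken by the number of touches that reach the same target. The function name and the example values are hypothetical illustrations, not items or code from the experiment.

```python
def syllables_per_touch(n_syllables: int, n_touches: int) -> float:
    """Syllables that must be spoken for each touch that reaches the same target."""
    if n_syllables < 0:
        raise ValueError("n_syllables must be non-negative")
    if n_touches <= 0:
        raise ValueError("n_touches must be positive")
    return n_syllables / n_touches


# Hypothetical targets (not taken from the study's stimuli).
# A 4-syllable target reachable with 2 touches: 2 syllables per touch.
print(syllables_per_touch(4, 2))
# A 5-syllable target reachable with a single touch: 5 syllables per touch.
print(syllables_per_touch(5, 1))
# Full text entry, where touches outnumber syllables, gives a ratio below 1.
print(syllables_per_touch(3, 7) < 1)
```

Under this reading, a ratio below 1 means touch costs more actions than voice costs syllables, which is the situation the Text entry task could not avoid.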

2.4 Participants

A total of eleven participants (5 females and 6 males) took part in the experiment, all from Yonsei University in Seoul, South Korea. They were all Koreans, with ages ranging from 25 to 37 years (average age = 30.27 years, SD = 3.74). Given the prevalence of touch input across diverse devices, it was assumed that all participants possessed prior familiarity with touch input. However, due to the relatively limited adoption of VUI, participants were asked about their exposure to and experience with VUI. They all had prior experience with VUI, although the frequency of usage varied among participants.

3.1 Text entry (Non-hierarchy)

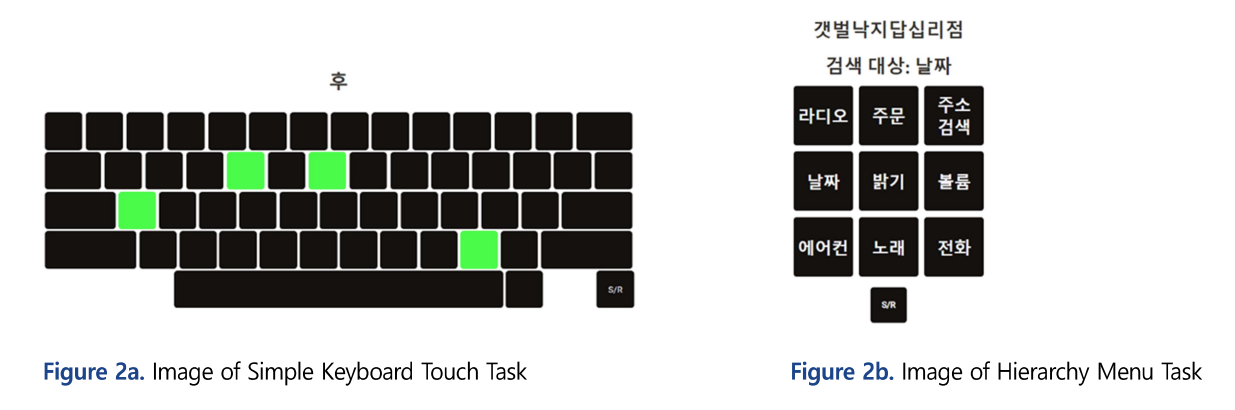

Figure 3 presents a graph based on a descriptive statistical analysis conducted for the Text entry task, illustrating the results of the number of characters affecting the probability of voice usage. The probability of voice usage was determined by converting the frequency of touch and voice input usage into percentages. When this percentage exceeds 50%, it indicates that voice usage surpasses touch usage in frequency.
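The conversion described above can be sketched in a few lines of Python. The function names and the counts in the example are hypothetical; the article does not report the raw frequencies.

```python
def voice_usage_percent(voice_count: int, touch_count: int) -> float:
    """Share of trials completed by voice, as a percentage of all trials."""
    total = voice_count + touch_count
    if total == 0:
        raise ValueError("at least one trial is required")
    return 100.0 * voice_count / total


def voice_dominates(voice_count: int, touch_count: int) -> bool:
    """True when voice usage exceeds the 50% mark used in the article."""
    return voice_usage_percent(voice_count, touch_count) > 50.0


# Hypothetical counts for one condition: 30 voice trials, 10 touch trials.
print(voice_usage_percent(30, 10))  # 75.0
print(voice_dominates(30, 10))      # True
```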

In the Text entry task, voice input was used far more often than touch input at every number of characters, even for a single character. At one character, the probability of using the voice modality already exceeded 50% and reached nearly 75%. For two to four characters, the probability approached 95%, and for five characters, it reached 100%. These results indicate that the probability of using the voice modality is considerably high regardless of the number of characters.

3.2 Simple keyboard touch (Non-hierarchy), Hierarchy menu (Hierarchy)

Figure 4 depicts a graph derived from binomial logistic regression analysis performed on both the Simple keyboard touch and Hierarchy menu tasks, illustrating the results of the syllable per touch affecting the predicted probability of voice usage. The predicted probability of voice usage was determined by analyzing the frequency of touch and voice input usage in percentage terms. When this percentage exceeds 50%, it can be predicted that voice usage will exceed touch usage.

In both the Simple keyboard touch and Hierarchy menu tasks, a decrease in the syllable per touch ratio was accompanied by an increase in the use of voice input. The point of switching between voice and touch modalities, which occurs at the 50% mark, is indicated by a horizontal line. The predicted probability of using the voice modality stayed above 50% up to almost two syllables per touch in the Simple keyboard touch task and almost five syllables per touch in the Hierarchy menu task. Beyond each of these points, the predicted probability of using the touch modality became higher.

The results of the analysis are presented in Table 1. The number of touches did not have a statistically significant effect on voice modality usage (p = 0.789). However, the syllable per touch ratio and menu structure had significant effects at the 0.05 significance level. Specifically, for each increase of one syllable per touch, the odds of voice usage were multiplied by 0.599, a decrease of about 40.1%, holding all other independent variables constant (p < 0.001, OR: 0.599, 95% CI: 0.571~0.628). Additionally, the odds of voice usage in the Hierarchy menu were 5.451 times those in the Simple keyboard touch, holding all other independent variables constant (p < 0.001, OR: 5.451, 95% CI: 4.422~6.720).

| IV | B | SE | Wald | p | OR | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|
| Syllable per touch | -0.513 | 0.024 | 440.092 | .000*** | 0.599 | 0.571 | 0.628 |
| Menu structure^a: Hierarchy menu | 1.696 | 0.107 | 252.426 | .000*** | 5.451 | 4.422 | 6.720 |
| Number of touches | - | - | - | .789 | - | - | - |

***p<.001
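The odds ratios and confidence intervals in Table 1 follow from the coefficients and standard errors by the usual Wald formulas, OR = exp(B) and CI = exp(B ± z·SE). The sketch below is an illustrative check, not the authors' analysis script; it uses the rounded B and SE values from the table, so the results match the published figures only to within rounding.

```python
import math

Z_95 = 1.959964  # two-sided 95% quantile of the standard normal distribution


def odds_ratio_with_ci(b: float, se: float, z: float = Z_95):
    """Return (OR, lower, upper) for a logistic regression coefficient."""
    return math.exp(b), math.exp(b - z * se), math.exp(b + z * se)


def percent_change_in_odds(b: float) -> float:
    """Percentage change in the odds for a one-unit increase in the predictor."""
    return (math.exp(b) - 1.0) * 100.0


# Rounded coefficients and standard errors copied from Table 1.
table_1 = {
    "Syllable per touch": (-0.513, 0.024),
    "Hierarchy menu": (1.696, 0.107),
}

for name, (b, se) in table_1.items():
    or_, lower, upper = odds_ratio_with_ci(b, se)
    print(f"{name}: OR={or_:.3f}, 95% CI=[{lower:.3f}, {upper:.3f}], "
          f"change in odds={percent_change_in_odds(b):+.1f}%")
```

For the syllable per touch ratio this gives a change in odds of roughly -40%, which is why an OR of 0.599 is read as a 40.1% reduction in the odds rather than a reduction "by 0.401 times".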

This study has revealed that modality selection between voice and touch varies depending on the menu structure. Specifically, the Text entry task examined whether the probability of using voice rather than touch input changed as the number of characters increased. However, an overwhelmingly high proportion of voice input was observed at every number of characters. This finding implies that in situations such as typing words on a keyboard, individuals prefer voice input over touch input regardless of the number of characters. This could be because typing a single Korean syllable on a keyboard requires an average of 2.57 touches, which is more time-consuming than using voice input. The result aligns with prior research that supports the higher usability of voice input compared to touch input in text entry tasks (Chaparro et al., 2015). It also appears consistent with studies indicating the advantages of voice input over keyboard input, particularly for longer inputs (Wechsung et al., 2010). Consequently, locating the point of switching between voice and touch in this task would require inputs shorter than one character, which is practically infeasible. The task therefore had to be modified: the Simple keyboard touch task was introduced, in which text entries were replaced with simple touches. Moreover, because the Text entry task showed no significant difference across the numbers of characters, the syllable per touch ratio was adopted as the measure.

In the Simple keyboard touch task, the syllable per touch ratio was used to examine how the proportion of voice and touch modalities varies. The task was designed to have the same or fewer number of touches than the number of characters to avoid the high probability of voice input usage seen in the Text entry task, which required a higher number of touches than the number of characters. The Hierarchy menu task also followed a similar approach as the Simple keyboard touch task, but differed in its menu structure, which was intentionally designed in a hierarchical manner.

The binomial logistic regression analysis of both tasks revealed that the number of touches was not a significant factor, whereas the syllable per touch ratio had a significant impact on the predicted probability of voice usage. The predicted probability of using voice input remained above 50% up to approximately two syllables per touch in the Simple keyboard touch task and five syllables per touch in the Hierarchy menu task, where it fell to 50%. These findings suggest that, even when voice input demands several syllables for each touch it replaces, the preference for the voice modality depends on its efficiency (Schaffer et al., 2011). This is because voice input streamlines the process by reducing the number of interaction steps required compared to other input modalities (Schaffer et al., 2011; Wechsung et al., 2010). This supports previous work indicating the efficiency of, and preference for, voice input relative to text input (Bilici et al., 2000). However, when the syllable per touch ratio exceeded these thresholds, the predicted probability of using touch input became higher. These results suggest that the optimal point at which users switch between voice and touch modalities can vary depending on the menu structure.
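The gap between the two switching points can be related to the reported coefficients with a short derivation. It assumes an additive logit model containing only the two significant predictors, with no interaction term; the symbols $s$ (syllable per touch), $h$ (1 for Hierarchy menu, 0 for Simple keyboard touch), and the intercept $\beta_0$ are notation introduced here, and $\beta_0$ is not reported in the article.

```latex
\begin{align*}
\operatorname{logit}(p) &= \beta_0 + \beta_1 s + \beta_2 h,
  \qquad \beta_1 = -0.513,\quad \beta_2 = 1.696 \\
p = 0.5 \;&\Longleftrightarrow\; \operatorname{logit}(p) = 0
  \;\Longrightarrow\; s^{*}(h) = -\frac{\beta_0 + \beta_2 h}{\beta_1} \\
s^{*}(1) - s^{*}(0) &= -\frac{\beta_2}{\beta_1}
  = \frac{1.696}{0.513} \approx 3.31
\end{align*}
```

Under these assumptions the Hierarchy menu threshold lies about 3.3 syllables per touch above the Simple keyboard touch threshold, which is in line with the reported switching points of roughly two and five. The absolute thresholds themselves cannot be recovered from the published coefficients without the intercept.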

This study has limitations that must be acknowledged. Firstly, the study focused primarily on exploring the optimal point of switching between voice and touch modalities, without considering the task performance. The variables such as error rate and task completion time that could potentially influence modality selection were not examined in this study. Future research could address this limitation by conducting a more comprehensive investigation of the relationship between modality selection and task performance. Additionally, another area for future research involves exploring different types of menu structures beyond the standard QWERTY keyboard layout and 3 × 3 matrix layout used in this study. The findings suggest that the optimal point of switching between modalities varies across different menu structures. Hence, it is important to explore a broader range of menu structures to gain a more comprehensive understanding of their impact on modality selection.

This study investigated switching between voice and touch modalities across different numbers of characters and hierarchy-based menu structures, using three distinct tasks. The Text entry task did not show a significant difference in voice usage across the numbers of characters, while the Simple keyboard touch and Hierarchy menu tasks identified the optimal point of modality selection based on the syllable per touch ratio. The study suggests that neither the number of characters nor the number of touches had a significant impact on users' modality selection. Instead, the syllable per touch ratio was found to be a crucial factor that should be considered in modality selection for menu structures. These findings offer valuable insights for developers seeking to design multimodal interactions for different menu structures.

References

1. Almeida, A. and Alves, A., Activity recognition for movement-based interaction in mobile games. Proceedings of the 19th International Conference on Human-Computer Interaction with Mobile Devices and Services (pp. 1-8), 2017. https://doi.org/10.1145/3098279.3125443

2. Atrey, P.K., Hossain, M.A., El Saddik, A. and Kankanhalli, M.S., Multimodal fusion for multimedia analysis: a survey. Multimedia Systems, 16, 345-379, 2010. https://doi.org/10.1007/s00530-010-0182-0

3. Bilici, V., Krahmer, E.J., Te Riele, S. and Veldhuis, R., Preferred modalities in dialogue systems. In Proceedings of the 6th International Conference on Spoken Language Processing (ICSLP), Beijing, China, October 16-20, 2000 (Vol. 2) (pp. 727-730). International Speech Communication Association (ISCA), 2000. https://doi.org/10.21437/icslp.2000-372

4. Billingsley, P.A., Navigation through hierarchical menu structures: does it help to have a map?. In Proceedings of the Human Factors Society Annual Meeting (Vol. 26, No. 1, pp. 103-107). Sage CA: Los Angeles, CA: SAGE Publications, 1982. https://doi.org/10.1177/154193128202600125

5. Chaparro, B.S., He, J., Turner, C. and Turner, K., Is touch-based text input practical for a smartwatch?. In HCI International 2015-Posters' Extended Abstracts: International Conference, HCI International 2015, Los Angeles, CA, USA, August 2-7, 2015. Proceedings, Part II 17 (pp. 3-8). Springer International Publishing, 2015. https://doi.org/10.1007/978-3-319-21383-5_1

6. Christie, J., Klein, R.M. and Watters, C., A comparison of simple hierarchy and grid metaphors for option layouts on small-size screens. International Journal of Human-Computer Studies, 60(5-6), 564-584, 2004. https://doi.org/10.1016/j.ijhcs.2003.10.003

7. Heater, B., The smart speaker market is expected to grow 21% next year, TechCrunch, https://techcrunch.com/2020/10/22/the-smart-speaker-market-is-expected-grow-21-next-year/ (retrieved April 19, 2023).

8. Kessler, A.M. and Chen, B.X., Google and Apple Fight for the car dashboard. The New York Times, http://www.nytimes.com/2015/02/23/technology/rivals-google-and-apple-fight-for-the-dashboard.html (retrieved April 19, 2023).

9. Kim, D.H. and Lee, H., Effects of user experience on user resistance to change to the voice user interface of an in-vehicle infotainment system: Implications for platform and standards competition. International Journal of Information Management, 36(4), 653-667, 2016. https://doi.org/10.1016/j.ijinfomgt.2016.04.011

10. Miller, D.P., The depth/breadth tradeoff in hierarchical computer menus. In Proceedings of the Human Factors Society Annual Meeting (Vol. 25, No. 1, pp. 296-300). Sage CA: Los Angeles, CA: SAGE Publications, 1981. https://doi.org/10.1177/107118138102500179

11. Mohamad Yahya Fekri, A. and Ajune Wanis, I., A review on multimodal interaction in Mixed Reality Environment. IOP Conference Series: Materials Science and Engineering (Vol. 551, No. 1, p. 012049). IOP Publishing, 2019. https://doi.org/10.1088/1757-899X/551/1/012049

12. Moore, B.A. and Urakami, J., The impact of the physical and social embodiment of voice user interfaces on user distraction. International Journal of Human-Computer Studies, 161, 102784, 2022. https://doi.org/10.1016/j.ijhcs.2022.102784

13. Naumann, A.B., Wechsung, I. and Hurtienne, J., Multimodal interaction: A suitable strategy for including older users?. Interacting with Computers, 22(6), 465-474, 2010. https://doi.org/10.1016/j.intcom.2010.08.005

14. Nguyen, Q.N., Ta, A. and Prybutok, V., An integrated model of voice-user interface continuance intention: the gender effect. International Journal of Human-Computer Interaction, 35(15), 1362-1377, 2019. https://doi.org/10.1080/10447318.2018.1525023

15. Parnell, S.I., Klein, S.H. and Gaiser, F., Do we know and do we care? Algorithms and Attitude towards Conversational User Interfaces: Comparing Chatbots and Voice Assistants. Proceedings of the 4th Conference on Conversational User Interfaces (pp. 1-6), 2022. https://doi.org/10.1145/3543829.3544517

16. Reeves, L.M., Lai, J., Larson, J.A., Oviatt, S., Balaji, T.S., Buisine, S., Collings, P., Cohen, P., Kraal, B., Martin, J.C., McTear, M., Raman, T.V., Stanney, K.M., Su, H. and Wang, Q.Y., Guidelines for multimodal user interface design. Communications of the ACM, 47(1), 57-59, 2004. https://doi.org/10.1145/962081.962106

17. Schaffer, S., Jöckel, B., Wechsung, I., Schleicher, R. and Möller, S., Modality selection and perceived mental effort in a mobile application. Proceedings of the 12th Annual Conference of the International Speech Communication Association (pp. 2253-2256), 2011. https://doi.org/10.21437/Interspeech.2011-599

18. Snowberry, K., Parkinson, S.R. and Sisson, N., Computer display menus. Ergonomics, 26(7), 699-712, 1983. https://doi.org/10.1080/00140138308963390

19. Song, Y., Yang, Y. and Cheng, P., The investigation of adoption of voice-user interface (VUI) in smart home systems among chinese older adults. Sensors, 22(4), 1614, 2022. https://doi.org/10.3390/s22041614

20. ULUDAĞLI, M.Ç. and Acartürk, C., User interaction in hands-free gaming: A comparative study of gaze-voice and touchscreen interface control. Turkish Journal of Electrical Engineering and Computer Sciences, 26(4), 1967-1976, 2018. https://doi.org/10.3906/elk-1710-128

21. Wechsung, I., An evaluation framework for multimodal interaction. T-Labs Series in Telecommunication Services. Cham: Springer International. 2014. https://doi.org/10.1007/978-3-319-03810-0

22. Wechsung, I., Engelbrecht, K.P., Naumann, A., Möller, S., Schaffer, S. and Schleicher, R., Investigating modality selection strategies. In 2010 IEEE Spoken Language Technology Workshop (pp. 31-36). IEEE, 2010. https://doi.org/10.1109/SLT.2010.5700818
