eISSN: 2093-8462 http://jesk.or.kr

Open Access, Peer-reviewed

Chang-Geun Oh

10.5143/JESK.2023.42.4.385 Epub 2023 August 30

Abstract

Objective: To discuss the design requirements of a digital twin (DT) for an urban air mobility (UAM) operation monitoring system, based on findings from the development of a Mixed Reality (MR)-based DT demonstrator.

Background: UAM vehicles will fly within and around urban areas in the near future. Since the UAM vehicles will fly above the urban airspace, proactive awareness of safety-related urban configurations is important. DT is a promising tool to plan, monitor, and assess collision probability with building obstacles and other air vehicles. The MR technology is considered a good means of live 3D graphics for users and an application of DT. Applying the contemporary MR technology to a DT for UAM operations and assessing the DT functions are meant to consider UAM monitoring system design alternatives.

Method: Applying the systems engineering process, the author elicited five design requirements of the DT prototype for the UAM operation monitoring purpose. The author's team conducted indoor drone flights around a building miniature and downloaded the coordinates of drone flight trajectories. The team generated virtual 3D graphics of the drone and miniature in the MR application to simulate UAM flight operations in an urban area. The developed application demonstrated simple UAM flights visualizing a building obstacle, planned/actual/optimal flight paths, and no-fly zones. The A-Star algorithm determined and rapidly updated the recommended optimal flight path while the drone significantly deviated from the planned path. The author assessed the demonstrator design to see if the design met the requirements.

Results: The concept demonstrator satisfied four of the five requirements regarding the DT functions for effective interaction with users.

Conclusion: The developed concept demonstrator designs may provide insights into the DT design directions of accurate planning, modeling, simulating, monitoring, and assessing UAM operations.

Application: The MR application will be an effective modeling and simulation tool to plan and monitor UAM operations and assess how the UAM vehicle searches for the optimal path avoiding building obstacles from the third-person view.

Keywords

Urban air mobility Digital twin Mixed reality Virtual reality Optimal flight path A-Star algorithm Concept demonstrator

Urban Air Mobility (UAM) is a brand-new air transportation system to react to the chronic congestion in surface transportation. It is based on the concept that large-size-motor-propelled air drones accommodate human passengers and cargo in and around urban areas (Greenfield, 2019; Federal Aviation Administration, 2020; EASA, 2021). Compared with the conventional air transportation system in which air traffic controllers (ATCers) manage manned airplanes, the UAM targets autonomous flights as the system stabilizes (Greenfield, 2019; Federal Aviation Administration, 2020; EASA, 2021). Since the FAA, NASA, and EASA expect the UAM operation field to be urban and suburban, many UAM vehicles are expected to take off, land, and move inside the area of building clusters in populous cities. After take-off, UAM vehicles will desirably search for the most economical flight path considering their battery life. At the same time, they have to avoid potential collisions against obstacles, including buildings, electrical wires, and other UAM vehicles in the common airspace (Greenfield, 2019; Federal Aviation Administration, 2020; EASA, 2021). The UAM pilots and managers need to proactively be aware of the locations of obstacles and flight routes of other vehicles that overlap with their path to avoid a potential collision. Researchers are investigating UAM flight path development strategies considering pilot in command (PIC) onboard vs. offboard, possible barriers, and ground-based operators (Mathur et al., 2019). The UAM transportation system may need to define strict spatial and temporal trajectories that UAM vehicles should fly along to solve this problem. If UAM vehicles deviate from this defined route in a building cluster area, returning to the original flight path may not be a good idea in many cases because it can cause further delay and battery consumption while detouring from behind unknown buildings. 
This scenario is possible since the UAM vehicle will not always fly in the open sky. In this case, the UAM vehicle may have to seek another optimal flight path from its current adverse position to the destination. This situation can occur concurrently for multiple UAM vehicles in the common urban airspace. In this condition, the transportation system needs to rapidly deliver the updated optimal path to each UAM vehicle because the PIC's decision-making may be less precise than the A.I.'s when the time available for the decision is limited. The A.I. may determine the alternative route in the complex environment in time.

According to the concept of operations (ConOps) of UAM infrastructure, the UAM corridors in urban areas are kept separate from general aviation (GA) aircraft and unmanned aerial vehicles for stable operations (Greenfield, 2019; Federal Aviation Administration, 2020; EASA, 2021). These corridors are the usual flight routes for similar classes of UAM vehicles. Moving in three-dimensional airspace is different from two-dimensional surface transportation. In the absence of visible traffic signs and lights, moving along the designated horizontal/vertical routes will sometimes be difficult even in autonomous flight due to external variables such as bad weather or strong wind. According to the ConOps (Greenfield, 2019; Federal Aviation Administration, 2020; EASA, 2021), the PIC will be responsible for monitoring the flight situation and can take over manual flight if the vehicle is in danger. Lascara et al. (2019) studied the UAM configuration in the national airspace system (NAS). They left the following research question open (Lascara et al., 2019): what decision support capabilities will be effective for ATC, unmanned aircraft systems traffic management (UTM), and other operators? The human operators inside and outside the UAM vehicle cockpit should have sufficient visual information about flight situations to maintain stable flights.

The authority over UAM flights will move from the onboard pilots to the A.I. as the systems become stabilized. In the autonomous flight environment, the UAM traffic managers/pilots and passengers will want to verify that the system ensures flight safety along the routes. Precise and rich spatial and temporal information around the UAM vehicle's autonomous flight routes will satisfy their needs. The solution to this will be a digital twin. A Digital Twin (DT) is virtual content that implements the digital transformation of a physical entity to mimic its spatial properties in real-time interconnection (Singh et al., 2021). Many DTs are being developed for various applications (factory assembly, construction, surface transportation, etc.) for safe and effective modeling and simulation (M&S) and operation monitoring. Ma et al. (2019) asserted that a DT could meet the new requirements of machine efficiency and operation safety for advanced manufacturing with a more flexible interaction with machines. There are some important HCI attributes to implement when considering the design of a DT for the UAM transportation infrastructure. The next sections introduce the HCI attributes and conduct systems engineering processes (requirement elicitation, system analysis, software development, and the internal test) to demonstrate the objective UAM DT concept. They then verify the requirements and discuss the pertinent HCI issues found while developing and evaluating the DT demonstrator for this study. To develop an MR-based UAM DT demonstrator with reliable space-time synchronization between the real and virtual worlds, this study implemented accurate spatial synchronization between the drone/obstacle and the 3D MR graphics. It also applied a simple A.I. algorithm to display the 3D optimal flight paths.

2.1 Accuracy of spatial regeneration of the real object

Conventional simulation tools may not fully aim to duplicate the spatial features and dimensions of real objects in their simulation graphics. Most current DT prototypes focus on the interaction between the users and DTs, assuming that the DT successfully implements the spatial accuracy of real locations and scales. Lee and Kim (2020) introduced brief DT-based UAM airway design concepts. Planning the UAM flight paths in the DT while considering the possibility of a collision with obstacles will need accurate spatial duplication of the real world. DT designers may be able to do this by interconnecting spatial coordinate data. To realize successful autonomous flight through all operational phases, the UAM aircraft should in the future be able to execute their flight operations (i.e., fly at a low altitude among tall buildings and land on vertiports or landing spots) by referring only to the DT, not to the PIC's vision.

2.2 Visualization of 3D spatial information and optimal flight path using effective methods

Because the UAM operational environment will be urban areas, not the open sky of traditional aviation, a rich, high-quality 3D representation of UAM operational information is essential. Most DTs are basically configured with 3D graphics in the virtual environment. However, 3D displays have seen only limited testing in aviation systems for ATC or air traffic management (Bourgois et al., 2005; Tavanti et al., 2003). Most conventional 3D displays depict the 3D graphics on 2D screens, and users rotate the viewing angle to see the 3D features. Mixed reality (MR) technology implements vivid 3D holograms that 3D graphics on a 2D screen cannot show. This feature makes MR a good technology scheme for DT. Tu et al. (2021) tested an MR-based DT applied to a crane monitoring and controlling system. The scale of monitoring the UAM operational environment is much bigger.

2.3 Agile monitoring and updating optimal flight path

The UAM traffic managers' monitoring paradigm will be the identification of collision possibilities with city obstacles and other UAM vehicles. At the same time, the A.I. will dynamically update the recommended optimal path, and the traffic managers/pilots will need to verify that the recommended optimal flight routes are safe to fly. If the DT fails to identify an obstacle that exists in the flight routes, or if there is latency (i.e., a time delay that arises because the volume of data packets for transmission exceeds the system capacity) between the real situation and the DT representation, the traffic managers'/pilots' decision-making will be unreliable. Even in conventional aviation, the latency between real weather situations and the picture on the weather display in the cockpit has been a critical safety issue (Caldwell et al., 2015). Recent information and communication technology (ICT) has been addressing this time delay problem by implementing high-speed mobile internet and advanced network technologies.

2.4 Quick and accurate depth perception for 3D map graphics

Humans can perceive depth in pictures or photos on 2D screens or canvases through processing in the visual cortex (Welchman, 2016). However, this depth perception is prone to error (Welchman, 2016). Humans can perceive depth in other ways in natural environments: stereopsis (i.e., depth perception recovered from binocular disparities) and accommodation (i.e., controlling the lenses' refractive power to focus on near vs. far objects). Humans may experience stereopsis and accommodation with computer-generated stereoscopic holograms (Barabas and Bove, 2013). In this respect, the 3D holographic view using MR devices is expected to support higher situation awareness.

2.5 Successful warning for the safety-critical incident

The DT will show an advanced interactive design evolved from the conventional 3D map or geographic information system (GIS). The DT design will use various augmented graphics for warnings, such as a probable collision at a specific spatial position or the detection of flight into a no-fly zone. Low-latency weather information on the DT could serve as an early warning information display (Riaz et al., 2023). Here again, since the use of 3D design and augmented graphics is the typical property of VR/AR/MR, the DT is expected to use VR/AR/MR technologies to complete its features.

3.1 Objective and requirement elicitation

This study aims to develop an MR-based 3D graphics monitoring system visualizing the optimal flight path of UAM with basic components of the UAM operational environment to demonstrate the concept of UAM operation. It shows the early phases of systems engineering processes for the UAM concept demonstrator development. Based on the HCI issues mentioned in the prior section, the author sets five system requirements from the HCI perspective in Table 1.

| No. | Requirement |
| --- | --- |
| Requirement 1 | The digital twin should show accurate 3D spatial information to proactively avoid a UAM vehicle's collision with building obstacles or other air vehicles. |
| Requirement 2 | The DT should provide the 3D spatial information of the original flight plan, the actual flight path from the departure point to the current point, and the optimal flight path of a UAM vehicle considering the time and distance to the destination rapidly. |
| Requirement 3 | The DT should be able to update the optimal flight path as the UAM vehicle's position deviates from the determined optimal flight path in real-time or near-real time. |
| Requirement 4 | The DT user should be able to rapidly perceive and identify the 3D spatial information of the UAM vehicle if the vehicle's current movement causes an intrusion into the no-fly zone near an urban area. |
| Requirement 5 | The DT should show an identifiable warning sign or graphics when it is imminent for a UAM vehicle to collide with a building obstacle or other air vehicles. |

Then the author conducted a system analysis to define and configure the system architecture.

3.2 System analyses

The author conducted system analyses considering human factors to develop a concept demonstrator that satisfied the five requirements. The system analyses enabled the function synthesis. This study conducted flight tests using rotary-type drones and obtained their flight trajectory coordinate data to generate the 3D flight paths in the MR application as simulated UAM operations. It applied the A-Star algorithm to determine the optimal flight paths of the drone. The A-Star algorithm determines the shortest or fastest path from one spatial point to another by exploring the available elemental paths in a grid environment. The author reserved a suitable indoor space to simulate an urban environment, placed a cuboid object at its center, and flew a drone around it. His software team developed an MR application to visualize the spatially synchronized 3D graphics of the cuboid object and drone. The MR users walked around the virtual 3D graphics to see all 360 degrees of graphical features. The developed application may provide third-person-view graphics supporting high-level survey knowledge for spatial navigation. High-level survey knowledge represents complex spatial insights about the navigation route from the allocentric standpoint (Chrastil and Warren, 2013).

The basic UAM operational environment is mostly airspace in and above urban and suburban areas. UAM vehicles will sometimes search for airspace between buildings to fly through. Avoiding potential collisions with buildings is a necessary function in this condition. Around some cities, no-fly zones restrict or prohibit the entry of UAM vehicles for security reasons. The PICs of UAM vehicles need to practice avoiding the no-fly zones as a critical mission. One typical deviation scenario is as follows: a UAM vehicle plans to fly to the left of a building. Due to strong wind, it deviates significantly from the planned path, shifting to the right. In this situation, flying to the right of the building instead of the left will save more time and battery life than returning to the planned flight path. This study applied scenarios similar to this one to demonstrate the UAM's flight. In these scenarios, the drone will search for the optimal flight path to the arrival point while maintaining operational safety and flight economy. Yang et al. (2020) developed a DT 3D simulation application that generated specific drone coordinates for training around its indoor flight space. This study developed another DT 3D simulation application with a similar method on the MR platform.

3.3 System architecture

Figure 1 shows the system architecture of the MR monitoring demonstrator. The air mobility GPS sensors collected spatial flight data to generate the drone's flight path graphics. This data was the input of the data analytics engine that determined the optimal path. Three obstacle items (building, other traffic, and no-fly-zone) were created and interacted with the data analytics engine. The data analytics engine processed the A-Star algorithm to determine the planned and optimal flight path. The data analytics engine processed the visualization of planned, actual, and optimal flight paths.

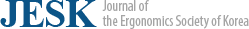

Figure 2 illustrates the conceptual sketch of the application of this study: an obstacle and the planned / actual / recommended paths. This concept picture draws the planned flight path in magenta, while the actual flight path (cyan line) depicts the path along which the drone deviates from the planned path between the departure point and the current position. The AI's recommendation draws the yellow line from the current position to the destination. The colors of the planned and actual flight paths comply with the Federal Aviation Administration (FAA) design standards for electronic flight instrument design (Duven, 2014). The author selected a distinct color for the optimal path so that people can easily tell the three important paths apart. The yellow or orange color is not included in the flight path design standard by the FAA or the International Civil Aviation Organization (ICAO).

3.4 Drone coordinate data acquisition

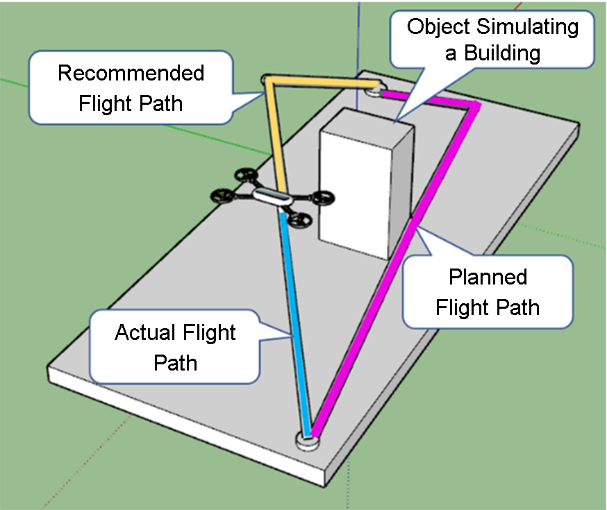

Figure 3 shows the indoor drone testing space. A space with dimensions of 3.3 m × 3.8 m × 2 m was devoted to the drone flying area. A cuboid-shaped box stood in the middle of the space simulating a building. The devoted space defined a set of standard three-dimensional coordinates. The experimenter defined the (0, 0, 0) coordinate at a corner vertex and derived the coordinates of the box obstacle from it. This test used an indoor GPS using ultrasound sensors (Marvelmind Starter Set NIA-SmallDrone) to obtain the drone's flight coordinate data. The experimenter attached seven beacon sensors on two wall surfaces and the floor to communicate among the ultrasound sensors. One beacon sensor was attached to the drone. The drone (Figure 4: DJI Tello) flew to the left of, to the right of, and above the box, and the test computer downloaded the drone's flight coordinate data via an internet connection. The distance from the departure to the destination point of flight was about 3.5 meters, and the drone flew below a height of 1 meter. The speed of the drone flight was 0.1 to 0.3 meters per second. The researcher extracted 150 position samples of the drone over this distance per flight and repeated the test flights. The drone coordinates were transformed because the coordinate standard of the dynamic drone generated by the Marvelmind system differed from that of the static obstacle.
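The paper does not specify the coordinate transformation that aligned the drone data with the obstacle frame. As a minimal sketch, assuming the two frames differ only by a rotation about the vertical axis and a shift of the origin, the conversion could look like the following. The function name `beacon_to_room`, the yaw angle, and the offset values are hypothetical and serve only as an illustration.

```python
import math


def beacon_to_room(point, yaw_deg, origin_offset):
    """Map an (x, y, z) point from the positioning-system frame to the room frame.

    Assumption: the frames differ by a rotation about the vertical (z) axis
    followed by a translation. Both parameters are illustrative only.
    """
    x, y, z = point
    yaw = math.radians(yaw_deg)
    # Rotate in the horizontal plane.
    xr = x * math.cos(yaw) - y * math.sin(yaw)
    yr = x * math.sin(yaw) + y * math.cos(yaw)
    # Shift so that the room's corner vertex becomes (0, 0, 0).
    ox, oy, oz = origin_offset
    return (xr + ox, yr + oy, z + oz)


# Hypothetical sample: one drone position reported by the positioning system.
room_point = beacon_to_room((1.0, 0.0, 0.5), yaw_deg=90.0,
                            origin_offset=(0.2, 0.3, 0.0))
print(room_point)
```

Applying the same function to every recorded sample would place the whole trajectory in the coordinate frame used for the box obstacle.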

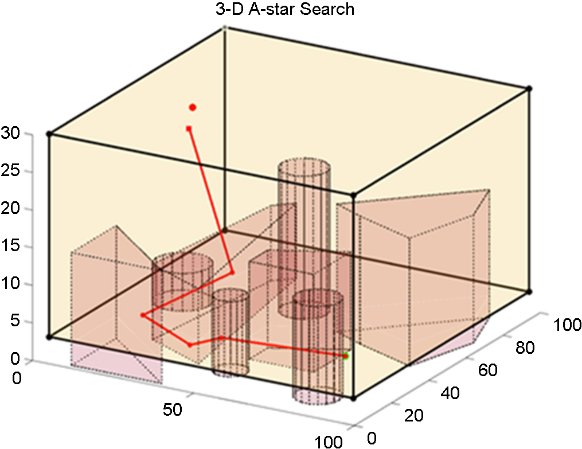

3.5 Implementation of an A.I. function to guide the optimal flight path

This study implemented an A-Star algorithm to determine the optimal flight paths in the 3D environment. Determining the optimal path means finding the minimum total cost of two legs: from the departure point to the current spatial position, and from the current spatial position to the destination point. The A-Star algorithm calculates the cost of traversing these two legs, and the vehicle's total movement can be visually implemented in various computer programs. The lowest cost here implies the shortest path or the fastest time. This study defined the total minimum cost as corresponding to the optimal distance when the experimenter intentionally moved the drone's position far from the original path. The conventional algorithm works in the 2D space defined by two axes (x and y). The 3D A-Star search implemented the path of the total movement with the minimum cost in the 3D space defined by three axes (x, y, and z), as seen in Figure 5. The algorithm thus expanded the space from 2D to 3D, visualizing the optimal flight path with the altitude component. The 3D navigation scenario prohibited direct vertical 90-degree movements from the departure point to simulate the practical UAM navigation situation around buildings. In this test scenario, the experimenter defined the drone's gradual climb up to the cruising altitude without a completely vertical movement. The only variable applied to the A-Star algorithm in this study was building obstacles. To implement higher safety for UAM, the system should include more external variables that may impact the vehicle's attitude, such as other air vehicles or strong building-induced wind.
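The 3D search described above can be illustrated with a minimal sketch. It is not the Unity code used in the demonstrator. It assumes a unit grid, a 26-connected neighbourhood with the two purely vertical moves removed, Euclidean step costs, and a straight-line heuristic. The name `astar_3d`, the grid size, and the obstacle layout are hypothetical.

```python
import heapq
import itertools
import math


def astar_3d(start, goal, blocked, size):
    """Return the lowest-cost list of (x, y, z) grid cells, or None if unreachable."""
    # 26-connected moves minus the two purely vertical ones (dx == dy == 0),
    # mirroring the rule that forbids direct 90-degree climbs or descents.
    moves = [d for d in itertools.product((-1, 0, 1), repeat=3)
             if d != (0, 0, 0) and (d[0], d[1]) != (0, 0)]

    def heuristic(cell):
        # Straight-line distance never overestimates the remaining cost.
        return math.dist(cell, goal)

    open_heap = [(heuristic(start), 0.0, start)]
    g_cost = {start: 0.0}
    parent = {start: None}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry, a cheaper route was already found
        for d in moves:
            nxt = (cell[0] + d[0], cell[1] + d[1], cell[2] + d[2])
            if any(not 0 <= nxt[i] < size[i] for i in range(3)) or nxt in blocked:
                continue
            new_g = g + math.dist(cell, nxt)
            if new_g < g_cost.get(nxt, math.inf):
                g_cost[nxt] = new_g
                parent[nxt] = cell
                heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None


# Hypothetical scene: a full-height wall of cells standing between start and goal.
size = (7, 7, 3)
building = {(3, y, z) for y in range(2, 5) for z in range(3)}
path = astar_3d((0, 3, 0), (6, 3, 0), building, size)
print(path)
```

Re-running the search from the vehicle's current cell instead of the departure cell yields an updated recommendation whenever the vehicle drifts off the plan.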

3.6 Development of MR application

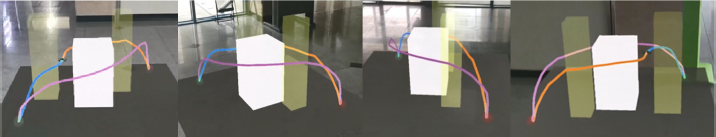

This study adopted Microsoft HoloLens 2 as the MR device. HoloLens 2 users can see all the features of the 3D graphics in the embedded MR application. After mapping between the real-world and virtual space dimensions, the application created virtual 3D cuboid obstacle graphics implementing 1:1 spatial synchronization with the test obstacle (a box, Figure 6). The application added the no-fly zone as a practically important component. The application defined the no-fly zone as "technically flyable, but it will be a violation if anyone flies into this zone." Figure 7 shows the graphical features of the developed MR application in pause mode so that readers can see all dimensions of the application as the HoloLens user walks around it. The white cuboid is the simulated building. The two transparent yellow cuboid graphics are no-fly zones. Although the captured shots in Figure 7 do not clearly show the vehicle's successful avoidance of obstacles, due to the ambiguity that arises when people see 3D graphics at a certain angle (line-of-sight ambiguity), the vehicle in this application avoided the three obstacles.

3.7 Internal test and evaluation

The author conducted only repeated internal tests to verify whether the demonstrator met the requirements. The requirement verification results are shown in Table 2.

| Requirement No. | Requirement | Satisfied or not |
| --- | --- | --- |
| Requirement 1 | The digital twin user should be able to perceive accurate 3D spatial information to proactively avoid a UAM vehicle's collision with building obstacles or other air vehicles. | Satisfied |
| Requirement 2 | The DT user should be able to perceive the accurate 3D spatial information of | Satisfied |
| Requirement 3 | The DT should be able to update the optimal flight path as the UAM vehicle's position deviates from the determined optimal flight path in real-time or | Satisfied |
| Requirement 4 | The DT user should be able to rapidly perceive and identify the 3D spatial information of the UAM vehicle if the vehicle's current movement causes | Satisfied |
| Requirement 5 | The DT should be able to show an identifiable warning sign or graphics | Not satisfied |

The DT graphics depicted the designed properties for the 3D shape and color. The application implemented the flight paths based on the drone test coordinate data. The project team implemented the A-Star algorithm in Unity (i.e., the 3D game engine used to develop the MS HoloLens application) to visualize the drone flight scenarios in the HoloLens 2 application. The HoloLens MR application visualized the original planned, actual, and recommended optimal flight paths in different colors. The planned path (magenta line) is the desired path that the pilot planned from departure to arrival. It is also the path obtained by applying the A-Star algorithm with no external variable in the test application. The recommended optimal path (orange line) is the path obtained by applying the A-Star algorithm with external variables added. The application dynamically drew this path when the vehicle deviated from the planned path line. This study assumed a strong wind variable that horizontally shifted the drone to create the actual paths.

The application visualized the dynamically updated recommended optimal flight path processed by the A-Star algorithm as the air vehicle flight progressed to the destination deviating from the planned path. Figure 8 shows the continuous update of the orange line (the recommended optimal path) as the air vehicle (green cube) flies from the departure to the destination points deviating from the magenta line (the planned path).
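One way to decide when the recommended path should be redrawn is to measure how far the vehicle has drifted from the planned polyline. The paper does not state the trigger that the demonstrator used, so the sketch below is an assumption: it computes the shortest distance from the current position to the planned path and compares it with a tolerance. The names `deviation_from_path` and `needs_replan`, the waypoints, and the tolerance value are hypothetical.

```python
import math


def point_segment_distance(p, a, b):
    """Shortest distance from point p to the segment a-b (any dimension)."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    denom = sum(c * c for c in ab)
    # Clamp the projection parameter so the closest point stays on the segment.
    t = 0.0 if denom == 0 else max(0.0, min(1.0, sum(x * y for x, y in zip(ap, ab)) / denom))
    closest = [ai + t * c for ai, c in zip(a, ab)]
    return math.dist(p, closest)


def deviation_from_path(position, planned_path):
    """Distance from the position to the nearest segment of the planned polyline."""
    return min(point_segment_distance(position, a, b)
               for a, b in zip(planned_path, planned_path[1:]))


def needs_replan(position, planned_path, tolerance):
    """True when the vehicle is farther from the plan than the tolerance."""
    return deviation_from_path(position, planned_path) > tolerance


# Hypothetical planned path (meters) and two sampled drone positions.
planned = [(0.0, 0.0, 0.5), (2.0, 0.0, 0.5), (3.5, 1.0, 0.5)]
print(needs_replan((1.0, 0.1, 0.5), planned, tolerance=0.3))  # small drift
print(needs_replan((1.0, 0.8, 0.5), planned, tolerance=0.3))  # large drift
```

When the check returns true, the path search would be run again from the current position to the destination.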

The 3D holograms showed their 360-degree features well while the team conducted internal tests. The drone's intrusion into the no-fly zone was also easily recognized when the team moved around the graphics to view them from a better angle. However, the team could not complete one function (requirement 5) due to the limitation of the planned project schedule: measuring the distance between vehicles and obstacles to determine the collision risk. This study only implemented the DT graphics based on the test data with a single drone.

4.1 Verification & validation of requirement satisfaction

The strengths of the MR-based UAM DT demonstrator found during the verification of requirements are as follows.

4.1.1 Requirement 1 (The digital twin's capability to perceive accurate 3D spatial information to proactively avoid a UAM vehicle's collision with building obstacles or other air vehicles)

The user needs to move around the 3D graphics to see all the spatial aspects because the DT graphics always hold a fixed orientation in the real space. Unlike with 3D graphics on a 2D screen, the user can interact with the DT while that orientation is preserved.

4.1.2 Requirement 2 (The DT's capability of supporting the accurate 3D spatial information of the original flight plan, the actual flight path, and the optimal flight path rapidly)

Pilots always estimate their planned and modified 3D flight routes because it is impossible to see the 3D flight routes in real airspace with the naked eye. Recent computer technology can augment the original and optimal 3D routes from the first-person view in the cockpit (a synthetic vision in the head-down display and a head-up display) and from the third-person view (the DT graphics). Remote pilots, rather than onboard pilots, are expected to be the DT user group. The first-person view methods may still be necessary for first-generation UAM aircraft while they require onboard pilots. However, the DT may be considered the crucial system when the UAM infrastructure realizes pilotless aircraft in the future.

4.1.3 Requirement 3 (The DT's capability of updating the optimal flight path as the UAM vehicle's position deviates from the determined optimal flight path in real-time or near-real-time)

While the DT demonstrator could rapidly update the optimal path, the drone test of this study did not realize real-time downloading of the flight coordinates. Implementing it in the next phase will require further investigation and funding. Real-time optimal route determination will be one of the main A.I. functions of the UAM systems. Implementation of this function requires real-time position identification in a seamless GPS signal environment in urban areas. However, high-rise buildings and tunnels in big cities often create regions where the GPS signal becomes weak or disappears. As an alternative 3D positioning method, the UAM system can integrate the UAM aircraft into the data communication environment. The current 5G environment can detect flying objects only up to 120 meters. Since the usual UAM operational altitude will be between 300 and 600 meters, a highly reliable combined GPS and data communication environment for UAM positioning will be available after the construction of the 6G network. Until then, research on the DT using miniature buildings and UAM vehicles will be possible in the 5G environment below an altitude of 120 meters.

The algorithm used in this study was a very basic optimal path algorithm in which the conventional A-Star algorithm expands from the 2D to 3D environment. Kim et al. (2022) showed that the A-Star algorithm could optimize the GA aircraft flight route.

4.1.4 Requirement 4 (The DT's capability of supporting to rapidly perceive and identify the 3D spatial information of UAM vehicle if the vehicle's current movement causes an intrusion into a no-fly zone near an urban area)

The DT will effectively detect intrusion into the no-fly zone. Current GA pilots plan and judge their flyable airspace using 2D-based maps (paper charts and electronic maps) with altitude information. Pilots are expected to encounter no-fly zones more frequently when flying in urban airspace. Users may judge an intrusion into the no-fly zone more intuitively using 3D holograms than using 3D graphics on 2D screens.
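Because the no-fly zones in the demonstrator are cuboid graphics, an intrusion can in principle be checked by testing whether the vehicle position lies inside an axis-aligned box. The following is a minimal sketch under that assumption and does not reproduce the application's code. The `Box` type, the zone names, and all coordinates are hypothetical.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned cuboid given by its minimum and maximum corners (meters)."""
    lo: tuple
    hi: tuple


def inside(box, position):
    """True when the position lies within the box on every axis."""
    return all(l <= c <= h for l, c, h in zip(box.lo, position, box.hi))


def intruded_zones(zones, position):
    """Names of all no-fly zones that contain the position."""
    return [name for name, box in zones.items() if inside(box, position)]


# Hypothetical no-fly zones placed beside a simulated building.
zones = {
    "zone_left": Box((0.0, 1.5, 0.0), (1.0, 2.5, 1.0)),
    "zone_right": Box((2.3, 1.5, 0.0), (3.3, 2.5, 1.0)),
}
print(intruded_zones(zones, (0.5, 2.0, 0.4)))
print(intruded_zones(zones, (1.6, 0.5, 0.4)))
```

A non-empty result could drive a highlight or color change of the corresponding zone graphic.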

4.1.5 Requirement 5 (The DT's capability of showing an identifiable warning sign or graphics when it is imminent for a UAM vehicle to collide with a building obstacle or other air vehicles)

Although the team did not accomplish this function during this study, the author verified that computer programming could sufficiently implement this requirement. The required condition for implementing this function is the real-time acquisition of drone position data. The author conducted another indoor drone test with two drones after completing this study. He produced highly probable vehicle-to-vehicle (V-V) situations around a building obstacle (Figure 9). The DT will be able to produce a graphical indication of collision danger when the distance between the 3D positions of the vehicles is within a certain value. Using this function, the DT will be able to test the identification of V-V and V-O (vehicle-to-obstacle) collision danger even without distance-measuring sensors (e.g., LiDAR). When the DT implements this function, the remote UAM traffic managers and pilots will be relieved of much of the workload of proactively avoiding collisions. Implementation of this function will need the UAM vehicle's coordinate data updated in real-time, and this data will be the input for AI-based collision prediction. The DT also needs a visualization of warning graphics if the vehicle intrudes into a no-fly zone.
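The distance-based indication described above can be sketched as follows. This is an illustration only and was not part of the demonstrator. It assumes that vehicle positions arrive as 3D points, that obstacles are axis-aligned boxes, and that a single threshold applies to both V-V and V-O checks. The function names and all numeric values are hypothetical.

```python
import itertools
import math


def point_to_box_distance(p, lo, hi):
    """Distance from point p to an axis-aligned box (0 if p is inside it)."""
    # Clamping each coordinate to the box gives the nearest point on the box.
    nearest = [min(max(c, l), h) for c, l, h in zip(p, lo, hi)]
    return math.dist(p, nearest)


def collision_warnings(vehicles, obstacles, threshold):
    """List V-V and V-O pairs whose separation is within the threshold."""
    warnings = []
    for (a, pa), (b, pb) in itertools.combinations(vehicles.items(), 2):
        if math.dist(pa, pb) <= threshold:
            warnings.append(("V-V", a, b))
    for name, p in vehicles.items():
        for obs, (lo, hi) in obstacles.items():
            if point_to_box_distance(p, lo, hi) <= threshold:
                warnings.append(("V-O", name, obs))
    return warnings


# Hypothetical positions (meters) of three drones and one box obstacle.
vehicles = {
    "drone_1": (1.0, 1.0, 0.8),
    "drone_2": (1.2, 1.3, 0.8),
    "drone_3": (3.0, 3.5, 0.5),
}
obstacles = {"box": ((1.4, 1.5, 0.0), (1.9, 2.0, 1.0))}
print(collision_warnings(vehicles, obstacles, threshold=0.4))
```

Each returned tuple could be mapped to a warning graphic at the midpoint between the two items involved.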

Additional HCI issues found during the development of the DT demonstrator are as follows.

4.2 VR, AR, and MR technologies considering the first-person view vs. the third-person view, human perception of 3D hologram

Implementing a DT with VR, AR, and MR needs different designs considering their characteristics and the user's visual perspective. Ke et al. (2019) analyzed four properties of VR/AR/MR technologies (immersion, interactivity, multi-perception, and key technologies) to consider which technology is the best for DT applications. Although there is no absolute answer on which technology should be applied to a specific DT purpose, VR may be effective for first-person view applications, while AR and MR may be effective for third-person view applications considering the user's freedom of movement for interaction. VR users may feel uneasy when walking around to interact with the DT because VR necessarily blocks or limits their perception of the real world. VR users may prefer interacting with the DT without walking around the 3D graphics while immersed in the first-person view application. MR users may prefer walking around the 3D DT graphics for interaction. Oh et al. (2021) integrated VR and MR applications for an aircraft pilot training system to apply their respective advantages of first-person and third-person views.

Situation awareness with a DT may be complemented when multiple persons watch a common scene from different third-person views or from combined first-person and third-person views. When one person watches a vehicle from behind as it moves behind a building, the spatial information that he or she obtains may differ slightly from that obtained by a person who watches the same movement from the front side of the vehicle.

Cowen (2001) and Alexander (2005) found that 3D graphics on a 2D screen could be even worse than 2D graphics for situation awareness in flight. 3D graphics unavoidably cause line-of-sight ambiguity at certain viewing angles. One example is the error in dynamic depth perception when the aircraft moves along the viewing direction. The studies showed that a 2D map coupled with a separate vertical situation display had more benefits for situation awareness in practice (Cowen, 2001; Alexander, 2005). However, the users in those studies had to perceive the 3D graphics passively, without full flexibility in controlling the viewing angle. The demonstrator in this study employs a more flexible interaction technique for perceiving the 3D situation. In the MR environment, users are free to choose any viewing angle of the 3D object of interest, although they need to move around to see the object from different sides. Depth perception performance in the MR environment may therefore differ from the results of the previous studies. To establish this difference more clearly, human-MR interaction techniques need to be developed. The 3D flight path graphics (planned, actual, and optimal flight paths) in the MR environment may provide predictive information for UAM navigation.
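The line-of-sight ambiguity can be illustrated with a simplified geometric sketch. The example below assumes an orthographic projection onto a fixed 2D screen (real displays typically use perspective projection, which weakens but does not remove the effect); the function name and coordinates are hypothetical. It shows that motion along the viewing direction leaves the projected position unchanged, whereas the same motion seen from another angle is visible.

```python
def project_to_screen(point, view_dir):
    """Orthographic projection: remove the component of `point` along the unit vector `view_dir`."""
    depth = sum(p * v for p, v in zip(point, view_dir))
    return tuple(p - depth * v for p, v in zip(point, view_dir))


# The aircraft moves 3 m along the y axis (illustrative coordinates only).
start = (1.0, 2.0, 3.0)
end = (1.0, 5.0, 3.0)

along_view = (0.0, 1.0, 0.0)  # viewer looks along the direction of motion
side_view = (1.0, 0.0, 0.0)   # viewer has moved to look from the side

# Same projected point before and after the move: the motion is invisible.
print(project_to_screen(start, along_view) == project_to_screen(end, along_view))
# Different projected points: the motion is visible from the side.
print(project_to_screen(start, side_view) == project_to_screen(end, side_view))
```

Under these assumptions, a user who can walk to the side view recovers the displacement that the fixed view hides, which is the kind of flexibility the MR environment is meant to offer.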

4.3 Integration of additional sensor data to digital twin

The DT will be capable of accurately integrating additional devices or facilities planned for installation at certain locations. This will be another main benefit of the DT in planning and verifying the UAM operational environment. The DT will be able to augment the existing environment with planned safety-critical objects, such as electric wires, to proactively identify the spatial variables of UAM operations. Weather-related variables such as valley wind between buildings may also seriously impact UAM operations. Augmenting the intensity and direction of the valley wind will be another effective function of the DT. This function will reduce the pilot's workload and let the pilot focus on the wind's impact on the UAM vehicle's attitude and stability.

4.4 Limitation of the demonstrator

The current version of MS HoloLens 2 does not fully support this study's hardware requirements. The demonstrator ran adequately because it was a simple software program. However, for a software program with more sophisticated features and graphical components, the CPU speed and memory capacity are expected to be insufficient. According to the author's investigation, no alternative device fully satisfies this study's ultimate design specification. MR goggle devices will need higher specifications to fully support the MR application planned for the next phase of this study.

4.5 Next phase of this study and its application for UAM systems

The author plans to upgrade the DT demonstrator so that the HoloLens application visualizes multiple drones' planned/actual/optimal flight paths and augmented devices/facilities, and to implement real-time downloading of the coordinates of drones flying outdoors around dummy buildings at a test site. He also plans to show more sophisticated variables, such as weather, wind, or conflicts with other air vehicles' original paths, in the next-generation DT model. The next phase will evaluate situation awareness using human-in-the-loop simulation tests in which the 3D DT demonstrator allows naturalistic, voluntary human actions to view 3D scenes, compared with 3D graphics on a fixed 2D screen and with a 2D map only. The DT design concept of this study may help establish standards for the medium and configurations of UAM traffic monitoring systems.

This study applied systems engineering processes to design, develop, and test an MR-based DT for the UAM traffic monitoring system. From the HCI and human factors perspectives, the DT's capability of delivering accurate spatial information with low latency and the user's flexibility to view the 3D graphics from any angle at any time may benefit UAM operations. The DT may support monitoring UAM vehicles' 3D movements and avoiding potential collisions with building obstacles and other air traffic. By augmenting more information relevant to UAM operations, the DT may become more useful in predicting operational status and safety levels.

References

1. Alexander, A.L., Three-dimensional navigation and integrated hazard display in advanced avionics: Performance, situation awareness, and workload. Technical Report AHFD-05-10/NASA-05-2, Hampton, VA, NASA Langley Research Center, 2005.

2. Barabas, J. and Bove, V.M., Visual perception and holographic displays. In Journal of Physics: Conference Series, 415(1), 2013.

3. Bourgois, M., Cooper, M., Duong, V., Hjalmarsson, J., Lange, M. and Ynnerman, A., Interactive and immersive 3D visualization for ATC. In USA/Europe Seminar on Air Traffic Management Research and Development, (pp. 303-320), Washington, DC, 2005.

4. Caldwell, B.S., Johnson, M.E., Whitehurst, G., Rishukin, V., Udo-Imeh, N., Duran, L., Nyre, M.M. and Sperlak, L., Impact of Weather Information Latency on General Aviation Pilot Situation Awareness. In 18th International Symposium on Aviation Psychology, (pp. 123), Dayton, OH, 2015.

5. Chrastil, E.R. and Warren, W.H., Active and passive spatial learning in human navigation: Acquisition of survey knowledge. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39(5), 1520-1537, 2013, https://doi.org/10.1037/a0032382

6. Cowen, M.B., Perspective view displays and user performance. KRC & VEL (Eds.), Biennial Review. 2001.

7. Duven, J.E., Electronic Flight Displays, Federal Aviation Administration (FAA) Advisory Circular (AC) AC 25-11B, 2014.

8. EASA, Study on the societal acceptance of Urban Air Mobility in Europe. 2021, https://www.easa.europa.eu/sites/default/files/dfu/uam-full-report.pdf (retrieved May 1, 2023).

9. Federal Aviation Administration, Concept of Operations v1.0 Fundamental Principles Roles and Responsibilities Scenarios and Operational Threads, 2020, https://nari.arc.nasa.gov/sites/default/files/attachments/UAM_ConOps_v1.0.pdf (retrieved May 1, 2023).

10. Greenfield, I., Concept of Operations for Urban Air Mobility Command and Control Communications (NASA/TM-2019-220159), 2019, https://ntrs.nasa.gov/api/citations/20190002633/downloads/20190002633.pdf (retrieved May 1, 2023).

11. Ke, S., Xiang, F., Zhang, Z. and Zuo, Y., An enhanced interaction framework based on VR, AR, and MR in digital twin. Procedia CIRP, 83 (pp. 753-758), Zhuhai, Hong Kong, China, 2019.

12. Kim, J., Justin, C., Mavris, D. and Briceno, S., Data-driven approach using machine learning for real-time flight path optimization. Journal of Aerospace Information Systems, 19(1), 3-21, 2022.

13. Lascara, B., Lacher, A., DeGarmo, M., Maroney, D., Niles, R. and Vempati, L., Urban air mobility airspace integration concepts. The MITRE Corporation, 2019.

14. Lee, K.B. and Kim, C.G., Study on "Digital Twin technology utilization" for "design and operation" of airway for UAM. The Proceedings of KSAS 2020 Fall Conference, (pp. 697-698), Jeju, Republic of Korea, 2020.

15. Ma, X., Tao, F., Zhang, M., Wang, T. and Zuo, Y., Digital twin enhanced human-machine interaction in product lifecycle. Procedia CIRP, 83 (pp. 789-793), Zhuhai, Hong Kong, China, 2019.

16. Mathur, A., Panesar, K., Kim, J., Atkins, E.M. and Sarter, N., Paths to Autonomous Vehicle Operations for Urban Air Mobility. In AIAA Aviation 2019 Forum, (p. 3255), 2019, https://doi.org/10.2514/6.2019-3255 (retrieved April 25, 2023)

17. Oh, C., Lee, K. and Oh, M., Integrating the First Person View and the Third Person View Using a Connected VR-MR System for Pilot Training. Journal of Aviation/Aerospace Education & Research, 30(1), 21-40, 2021, https://doi.org/10.15394/jaaer.2021.1851

18. Riaz, K., McAfee, M. and Gharbia, S.S., Management of Climate Resilience: Exploring the Potential of Digital Twin Technology, 3D City Modelling, and Early Warning Systems. Sensors, 23(5), 2659, 2023.

19. Singh, M., Fuenmayor, E., Hinchy, E.P., Qiao, Y., Murray, N. and Devine, D., Digital twin: origin to future. Applied System Innovation, 4(2), 36, 2021.

20. Tavanti, M., Le, H.H. and Dang, N.T., Three-dimensional stereoscopic visualization for air traffic control interfaces: a preliminary study. In Digital Avionics Systems Conference, 2003. DASC'03. The 22nd, 1 (pp. 5.A.1-1, 1-7) Indianapolis, IN., 2003.

21. Tu, X., Autiosalo, J., Jadid, A., Tammi, K. and Klinker, G.A., Mixed Reality Interface for a Digital Twin Based Crane. Applied Sciences, 11(20), 9480, 2021.

22. Welchman, A.E., The human brain in depth: how we see in 3D. Annual Review of Vision Science, 2, 345-376, 2016.

23. Yang, Y., Meng, W. and Zhu, S., A Digital Twin Simulation Platform for Multi-rotor UAV. In 2020 7th International Conference on Information, Cybernetics, and Computational Social Systems (ICCSS), (pp. 591-596), 2020.
