eISSN: 2093-8462 http://jesk.or.kr

Open Access, Peer-reviewed

Kewei Zhang, Younghwan Pan

10.5143/JESK.2025.44.2.95 Epub 2025 May 02

Abstract

Objective: The aim of this study is to investigate the key user experience factors of graphical user interfaces (GUI) and conversational user interfaces (CUI) in human-vehicle interaction systems.

Background: Vehicle interface systems have evolved significantly over the past few decades. Early systems primarily relied on graphical interfaces, using touchscreens, physical buttons, and knobs to control functions such as navigation, climate, and media. However, with the increasing development of artificial intelligence (AI) and voice recognition technologies, vehicle manufacturers have introduced intelligent dialogue systems that allow for voice-driven control. These intelligent systems are seen as a promising solution to enhance safety, usability, and convenience by reducing cognitive load and manual distractions for drivers. Despite this evolution, systematic usability evaluations comparing these two interaction paradigms remain limited. Investigating the characteristics, key user factors, and challenges of GUI and CUI systems is critical for improving human-vehicle interaction design.

Method: A systematic literature review was conducted to identify and analyze studies on user experience factors, interaction devices, and evaluation methods related to both graphical and voice-based interactions in vehicle interface systems. Research articles from 2014 to 2024 were included in this review. A total of 43 studies were selected and analyzed.

Results: This literature review identified and categorized key user experience factors for both graphical and intelligent dialogue interfaces in vehicle systems. Graphical interfaces emphasize usability, efficiency, learnability, responsiveness, cognitive load, and safety, whereas conversational interfaces focus on naturalness, efficiency, responsiveness, usability, accuracy of recognition, trust, safety, and anthropomorphism. The review highlighted the importance of considering shared user experience factors in vehicle interface design.

Conclusion: This study systematically identified and analyzed key user experience factors of GUI and CUI in human-vehicle interaction. While GUI offers a robust platform for visually intensive tasks, CUI introduces a more natural and conversational interaction model. The findings highlight the need for hybrid systems that integrate the strengths of both interaction paradigms to improve user experience. However, the studies reviewed did not provide extensive performance metrics for all interaction types, suggesting a need for further research that includes standardized evaluation criteria across different scenarios.

Application: The findings from this study can inform the design and evaluation of vehicle interface systems, guiding manufacturers in the development of both graphical and intelligent dialogue systems. Understanding the key factors will assist in creating interfaces that are more intuitive, efficient, and satisfying for drivers, ultimately contributing to improved usability in modern vehicles.

Keywords

Human-vehicle interaction, Graphical User Interface (GUI), Conversational User Interface (CUI), User experience factors

In recent years, advancements in cutting-edge technologies such as autonomous driving, artificial intelligence (AI), and 5G connectivity have profoundly influenced the automotive industry. According to IHS Markit, the global market for intelligent vehicle cockpits is projected to reach $68.1 billion by 2030. Modern vehicles are increasingly integrating features like advanced driver-assistance systems (ADAS) and intelligent conversational assistants, making driving experiences more efficient, seamless, and engaging (Garikapati and Shetiya, 2024). Intelligent human-vehicle interaction systems are key to future automotive development, and the evolution of the in-Vehicle Human-Machine Interface (iHMI) has been pivotal in redefining how users engage with vehicles (DanNuo et al., 2019). Traditional interaction paradigms, dominated by physical controls such as buttons, dials, and touchscreens, are gradually giving way to more technology-driven systems powered by motion capture and speech recognition technologies (Tan et al., 2022).

GUI offers an information-intensive visual interaction method, while CUI relies on voice commands to enable hands-free operation. This transition from traditional visual-driven interfaces to speech-driven systems introduces new opportunities and challenges for user experience, which is also a focus of human-vehicle interaction design research (Li et al., 2021). However, existing research primarily explores the technologies and performance of GUI or CUI independently (Chen et al., 2024; Jianan and Abas, 2020) and lacks systematic studies of their usability.

This study searches and integrates the literature in related fields to explore the user experience factors of GUI and CUI in driving scenarios. The focus is to analyze their characteristics from the perspective of the in-vehicle interactive interface and to extract the key UX factors. A clear understanding of the user experience factors that should be considered in GUI and CUI interaction systems supports the optimization of user experience in the next generation of in-vehicle interaction systems, and these factors can be applied as scales in future iHMI evaluation studies. The following research questions were set to achieve the research objectives.

RQ1: What research is currently being conducted on GUI and CUI from a user experience perspective?

RQ2: What are the key UX factors that influence GUI and CUI?

For research question 1, the theoretical background arises from Human-Computer Interaction (HCI) theory, which suggests that user satisfaction is significantly affected by how well a system aligns with user expectations in terms of usability, efficiency, and cognitive load (Norman, 2013). The shift from GUI to CUI represents a frontier in human-vehicle interaction research, as the new interface paradigm addresses new user needs. Examining both systems from a UX perspective is necessary to identify their distinct challenges, and the method used here is to sort and summarize the existing literature. Addressing this gap in the literature is critical, as current studies often examine these interfaces independently, limiting the insights on how they may complement one another within the same vehicle system.

For research question 2, user experience (UX) is an important consideration in the design and evaluation of interactive systems, especially in high-risk environments such as driving. In the context of vehicular interfaces, a growing body of literature identifies various factors that influence the effectiveness of GUIs and CUIs, such as usability, responsiveness, safety, and efficiency (Farooq et al., 2019). However, there is still a lack of comprehensive understanding of the key user experience factors that influence both interfaces in vehicle systems. Finding key user experience factors through a combination of qualitative and quantitative research methods can help maximize the user experience by seamlessly integrating graphical and conversational user interfaces. In addition, understanding these factors will allow for the development of more standardized evaluation criteria for future in-vehicle systems, which will be critical for comparative performance evaluations across platforms and technologies.

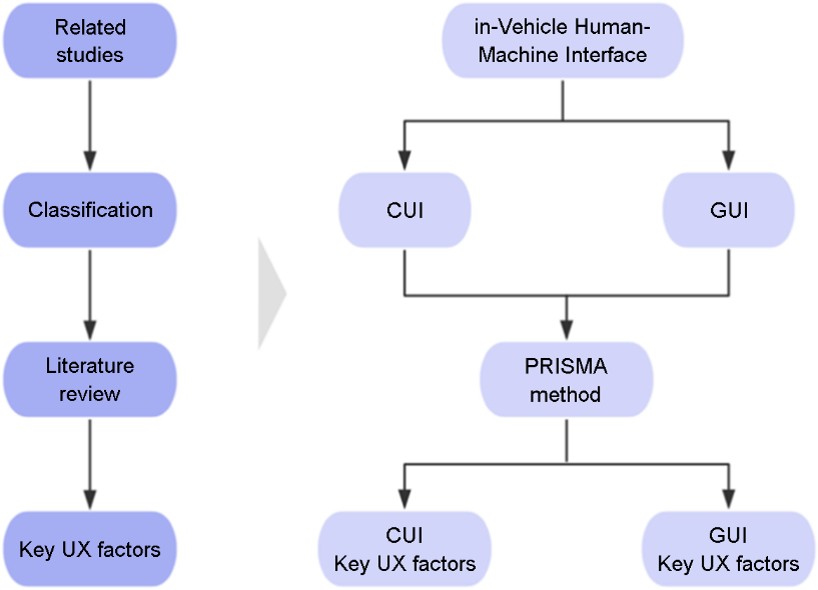

This study employed the following research methodology (Figure 1). First, based on the work of Ruijten et al. (2018), intelligent vehicle interfaces were categorized into GUI (Graphical User Interface) and CUI (Conversational User Interface). This categorization was used to determine the relevant database search keywords.

Subsequently, a systematic literature review was conducted using the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) methodology, a widely cited framework that provides a checklist of essential items for reporting systematic reviews and meta-analyses (Moher et al., 2009). The review focused on three major international academic databases: ACM Digital Library, Web of Science (WOS), and IEEE Xplore. ACM and IEEE Xplore include specialized journals on human-computer interaction, providing extensive resources on human-vehicle interaction, while WOS covers broader research in user experience design as a supplement. The search query was constructed using Boolean operators, combining keywords from three categories: GUI, CUI, and IHMI (keywords listed in Table 1).

| Context | Keywords |
| --- | --- |
| GUI | "In-Vehicle graphical user interface" OR "In-Vehicle visual user interface" |
| CUI | "In-Vehicle conversational user interface" OR "In-Vehicle speech-based Interface" |
| IHMI | "In-Vehicle human-machine interface" OR "IHMI" OR "human vehicle interface" OR "Driver-Vehicle Interaction" OR "in-vehicle interaction" |
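As an illustration of how the Table 1 keywords could be combined with Boolean operators, the following minimal sketch assembles a single query string. The text does not state exactly how the three categories were joined, so the choice to OR the GUI and CUI terms together and AND them with the IHMI terms is an assumption, and the names `KEYWORDS`, `or_group`, and `build_query` are hypothetical.

```python
# Keywords copied from Table 1.
KEYWORDS = {
    "GUI": [
        "In-Vehicle graphical user interface",
        "In-Vehicle visual user interface",
    ],
    "CUI": [
        "In-Vehicle conversational user interface",
        "In-Vehicle speech-based Interface",
    ],
    "IHMI": [
        "In-Vehicle human-machine interface",
        "IHMI",
        "human vehicle interface",
        "Driver-Vehicle Interaction",
        "in-vehicle interaction",
    ],
}


def or_group(terms):
    """Quote each phrase and join the phrases with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(keywords):
    """Build one Boolean query from the three keyword categories.

    ASSUMPTION: interface terms (GUI or CUI) are ANDed with the IHMI
    context terms. The paper does not specify the exact combination.
    """
    interface_terms = keywords["GUI"] + keywords["CUI"]
    return f'{or_group(interface_terms)} AND {or_group(keywords["IHMI"])}'


query = build_query(KEYWORDS)
print(query)
```

Each database has its own field syntax, so a string like this would normally still need adapting before it is pasted into ACM Digital Library, Web of Science, or IEEE Xplore.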

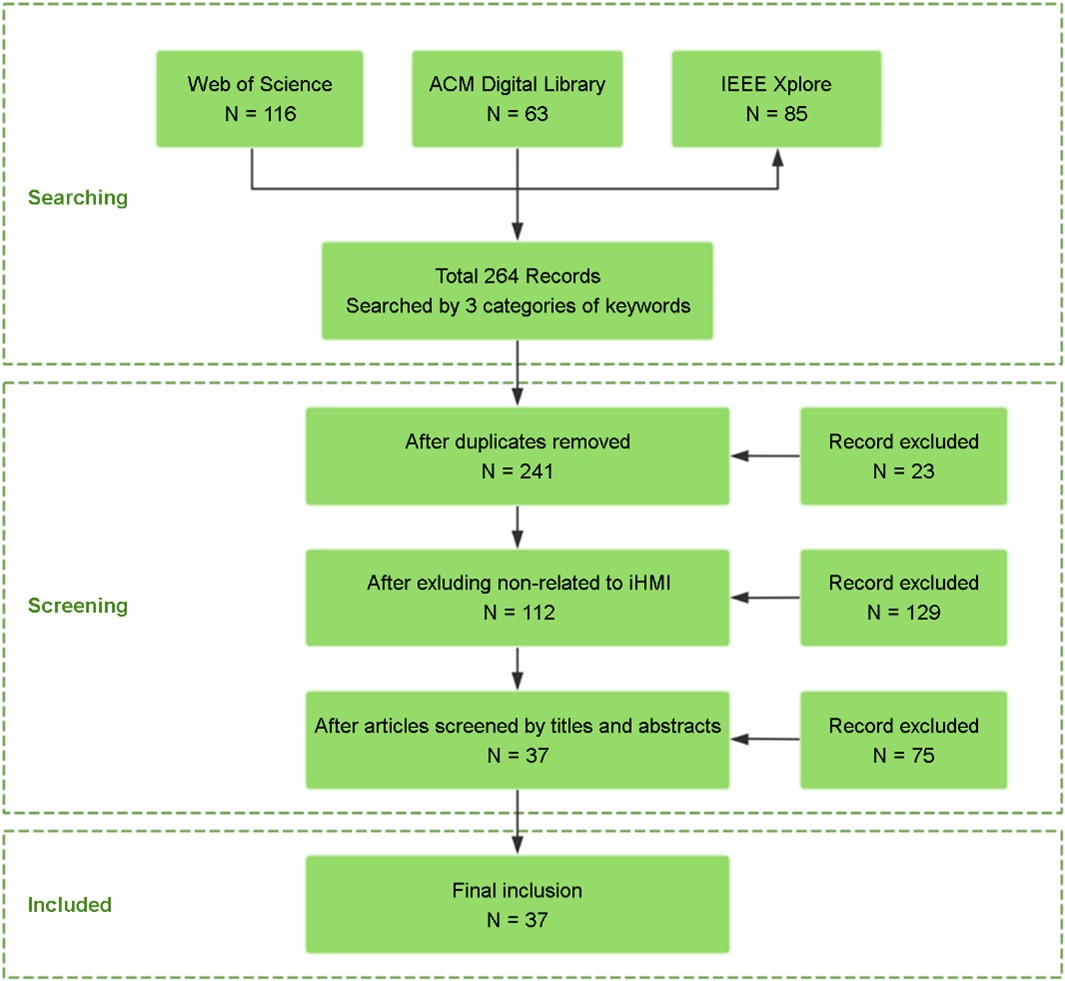

The search was conducted in December 2024. The process of paper retrieval and selection is illustrated in Figure 2. A total of 264 papers were initially collected, including 116 from Web of Science, 63 from ACM Digital Library, and 85 from IEEE Xplore. Only research articles were included, excluding conference reports, review papers, and workshop proposals. After removing 23 duplicate papers, only studies focusing on IHMI (In-Vehicle Human-Machine Interface) systems were retained, excluding those related to eHMI (External Human-Machine Interface), resulting in 112 eligible papers. These 112 papers were further screened based on their titles and abstracts. Articles irrelevant to human-computer interaction and user experience—such as those focusing solely on algorithms, software, or toolkits—were excluded, leaving 37 papers that met all inclusion criteria for detailed review.
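The counts reported above can be checked with a few lines of arithmetic. This is only a sketch of the selection flow: the per-stage exclusion counts are derived by subtraction and are not reported in the text, and the variable names are illustrative.

```python
# Records identified per database, as reported in the text.
identified = {
    "Web of Science": 116,
    "ACM Digital Library": 63,
    "IEEE Xplore": 85,
}

total_identified = sum(identified.values())      # reported as 264
duplicates_removed = 23                          # reported
after_deduplication = total_identified - duplicates_removed

eligible_ihmi = 112                              # reported: IHMI-focused papers
included = 37                                    # reported: after title/abstract screening

# Derived values (NOT stated in the text, computed by subtraction only).
excluded_before_eligibility = after_deduplication - eligible_ihmi
excluded_in_screening = eligible_ihmi - included

print(f"Identified: {total_identified}")
print(f"After removing duplicates: {after_deduplication}")
print(f"Excluded before eligibility (derived): {excluded_before_eligibility}")
print(f"Excluded in title/abstract screening (derived): {excluded_in_screening}")
print(f"Included: {included}")
```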

3.1 RQ1 Analysis of related research

To address Research Question 1, we conducted a systematic literature review. Drawing on the study by Kim et al. (2015), which classified iHMI (In-Vehicle Human-Machine Interface) from the perspective of UX, this paper divides GUI interaction into three categories: physical buttons, touch screens, and gestures, with a total of 27 studies across the three categories. CUI interaction is represented by speech, with a total of 13 studies. Three of the articles study both GUI and CUI interactions.

3.1.1 Graphical interface

The graphical interface in in-vehicle systems refers to the visual display of information to drivers, using icons, text, colors, and other graphical elements as visual cues. These interfaces provide intuitive and efficient prompts for information and operations (Gao et al., 2024). The core objective of such interfaces is to visually communicate vehicle status and operational feedback to drivers, allowing them to interact via screens, HUDs, or physical controls (Tan et al., 2022). In recent years, graphical interfaces have expanded their applications to areas such as dynamic information display (Trivedi, 2007), multitasking management (Harrison and Hudson, 2009), and user behavior feedback (Brouet, 2015). They demonstrate significant advantages in enhancing drivers' cognitive efficiency and operational accuracy (Jansen et al., 2022). However, potential cognitive load remains a key challenge, especially in highly dynamic environments where drivers frequently shift their attention. This could result in operational errors or distractions (Beringer and Maxwell, 1982). Therefore, the impact of graphical interfaces on safety is a critical aspect of user experience in human-vehicle interaction. This study focuses on three main GUI interaction modes: physical buttons, touch screens, and gestures.

Physical button

Physical buttons, a long-established form of interaction in in-vehicle systems, are characterized by their simple structure, reliable operation, and highly intuitive tactile feedback (Morvaridi Farimani, 2020). This traditional interaction method has demonstrated excellent applicability in driving environments, particularly in scenarios requiring quick actions or low attentional demands (Beringer and Maxwell, 1982). Research indicates that physical button design should emphasize functional modularization and interface simplification, enabling drivers to perform operations quickly with limited attention. For example, arranging frequently used buttons within natural reach of the steering wheel or central console can significantly reduce operation time and attention shifts (Harrison and Hudson, 2009).

However, the static nature of traditional physical buttons limits their functional scalability, making it challenging to meet the complex demands of modern in-vehicle interaction systems (Yan and Xie, 1998). To address this challenge, recent advancements in dynamic button technologies have emerged. These integrate tactile feedback modules into visual displays, enabling real-time layout adjustments based on task requirements, thereby enhancing interface flexibility and adaptability (Jansen et al., 2022). For instance, designs incorporating deformable buttons can simulate the tactile sensation of physical buttons, allowing users to experience realistic feedback during screen operations, effectively merging the functions of touch screens and traditional buttons (Fumelli et al., 2024). Furthermore, Brouet's research indicates that physical buttons exhibit shorter response times in emergency scenarios (Brouet, 2015). In high-speed driving or complex traffic environments, drivers can quickly activate emergency braking buttons, ensuring greater safety. Although physical buttons have a low learning curve, making them easier for drivers to master, excessive button design can lead to interface clutter, increasing cognitive load and the risk of operational errors (Zaletelj et al., 2007). Therefore, physical button layouts should align with drivers' natural motion habits, such as placing frequently used buttons within the driver's natural arm extension range. Additionally, secondary functions should include appropriate visual indicators and feedback to support rapid operations (Yeo et al., 2015).

Touch based interaction

A touch screen presents a graphical representation of buttons that can be resized to fit the screen space (Lee and Zhai, 2009). With technological advancements, this highly efficient interaction method has been applied to functions such as navigation, multimedia, and climate control, becoming a mainstream iHMI interaction method in the past decade (Zhang et al., 2023).

The core advantage of touchscreens lies in their ability to offer highly customizable interfaces, enabling users to perform various tasks through single or multi-touch gestures, such as zooming maps, swiping menus, and selecting options. This intuitive interaction significantly reduces the learning curve for users (Talbot, 2023). Touchscreens can display layered interfaces to present various types of information, optimizing multitasking management in driving environments. For instance, navigation information, vehicle status, and entertainment system data can be displayed simultaneously, allowing drivers to quickly access critical data (Mandujano-Granillo et al., 2024). Multitouch technology is a notable innovation for touchscreens, enabling complex tasks through gesture recognition. For example, users can adjust map scales with two-finger zooming or switch playlists with swipe gestures, substantially enhancing operational efficiency and user experience (Leftheriotis and Chorianopoulos, 2011).

While touch-based interfaces have advantages such as simplicity, rich information display, and entertainment value over traditional physical buttons (Large et al., 2019), their use for common secondary vehicle controls and infotainment services often demands excessive visual attention. This may adversely affect driving performance and vehicle control, increasing risks for drivers and other road users. Therefore, the design of touch screens is important for user safety. Bae et al. (2023) indicated that larger touch buttons and higher screen positions in in-vehicle information systems reduce distraction and improve performance.

In comparison to physical buttons, touchscreens lack tactile feedback, leading to decreased task performance and increased cognitive load as drivers must visually confirm their actions more frequently (Duolikun et al., 2023; Ferris et al., 2016; Wang et al., 2024). To address this issue, researchers propose integrating tactile feedback technology into touchscreens. For example, embedding deformable buttons or vibration cues into the touchscreen surface can provide realistic tactile feedback during operation, reducing visual dependency (Harrison and Hudson, 2009). Furthermore, Sharma et al. suggest integrating augmented reality (AR) technology into in-vehicle touchscreens to overlay navigation and other data directly onto the interface, significantly enhancing information accessibility and intuitiveness (Sharma et al., 2024). This integration aims to improve drivers' situational awareness, interaction quality, and overall driving experience. However, AR interface design should prioritize clarity and relevance to ensure that the displayed information does not overwhelm or distract drivers. This includes careful consideration of visual elements, such as boundary shapes and symbols for object detection and navigation (Merenda et al., 2018).

Gesture based interaction

Gesture-based interaction aims to achieve more intuitive, natural, and direct interactions while reducing visual distractions and enhancing safety. By leveraging machine vision or sensor technologies to capture drivers' hand movements, gesture-based systems facilitate touch-free interaction, effectively minimizing physical contact with the interface. Various technologies have been explored to achieve these goals. Lee and Yoon introduced a steering wheel finger-extension gesture interface combined with a heads-up display, increasing emergency response speed by 20% (Lee and Yoon, 2020). This system allows users to control audio and climate functions while keeping both hands on the steering wheel. Another interface method proposed by Lee et al. integrates gesture control with heads-up displays, projecting frequently used audio and climate controls from the central console onto a heads-up display menu (Lee et al., 2015). Drivers can operate these controls using specific hand gestures while maintaining their grip on the steering wheel. This approach effectively addresses operational failures or accidental triggers when drivers' hands are slippery or gloved. D'Eusanio et al. (2020) developed a natural user interface (NUI) based on dynamic gestures captured using RGB, depth, and infrared sensors. This system is designed for challenging automotive environments, aiming to minimize driver distraction during operation.

For gesture recognition systems, accuracy and reliability remain significant technical challenges. Complex backgrounds, varying lighting conditions, and environmental interference can reduce sensor accuracy, resulting in misrecognition or non-responsiveness (Kareem Murad and H. Hassin Alasadi, 2024). Differentiating true user intent from accidental actions remains a critical obstacle (Li et al., 2024). The selection of sensors, particularly visual sensors, plays a pivotal role in system performance and design (Berman and Stern, 2012). To address these challenges, researchers are exploring adaptive models and personalized approaches to improve the accuracy and stability of gesture recognition (Li et al., 2024). For instance, Čegovnik and Sodnik (2016) proposed a prototype recognition system based on LeapMotion controllers for in-vehicle gesture interactions. Additionally, advancements in hand modeling, feature extraction, and machine learning algorithms continue to enhance the functionality of gesture recognition systems (Kareem Murad and H. Hassin Alasadi, 2024).

3.1.2 Conversational user interface

The development of conversational user interfaces (CUI) in HMI aims to deliver a safer, more intuitive, and connected driving experience. CUIs enable drivers to perform hands-free and voice-controlled interactions for navigation (Large et al., 2019), infotainment (Jakus et al., 2015), and diagnostics (Ruijten et al., 2018), thereby reducing cognitive load and enhancing safety. According to Large et al. (2019), CUIs equipped with AI-powered conversational agents that offer empathy and a sense of control during autonomous driving journeys are particularly effective in building trust and improving user experience. With advancements in AI and its deeper integration into human-vehicle interaction systems, CUIs are anticipated to become indispensable tools for bridging the gap between humans and autonomous driving systems, leveraging their human-centric adaptive designs (Bastola et al., 2024).

Speech based interaction

Speech interaction is the most widely adopted auditory interaction mode in automotive HMI systems. Rooted in natural language processing (NLP) technology, it facilitates seamless communication between drivers and vehicle systems via voice commands (Politis et al., 2018). Compared to visual interfaces, the primary advantage of speech interaction lies in eliminating the need for manual operations and visual attention, significantly reducing cognitive load during driving (Murali et al., 2022). For instance, drivers can directly adjust navigation routes, control multimedia playback, or make phone calls via voice commands, enhancing operational efficiency and driving safety (Mandujano-Granillo et al., 2024).

Driven by AI, conversational human-vehicle interactions have become increasingly natural and human-like. Ruijten et al. (2018) indicate that conversational interfaces mimicking human behavior can significantly boost trust and acceptance of autonomous vehicles. Users often prefer interfaces with confident and human-like characteristics, which enhance their overall journey experience and sense of control. Integrating generative AI tools to create empathetic user interfaces that understand and respond to human emotions further strengthens the interaction between users and vehicles. This approach aims to make autonomous driving more convenient and enjoyable by designing context-responsive systems that cater to users' emotional states (Choe et al., 2023).

However, speech interaction also has limitations. Studies highlight noise interference and speech recognition errors as major issues. For example, environmental noise in high-speed driving or busy urban streets can disrupt voice commands, leading to unresponsive or erroneous system responses (Sokol et al., 2017). Additionally, variations in drivers' linguistic habits, accents, and speech speeds may affect the system's comprehension capabilities (Jonsson and Dahlbäck, 2014). Stier et al. (2020) reveal differences in speech patterns and syntactic complexity between human-human and human-machine interactions under varying driving complexities. To enhance the efficiency and safety of in-car speech interactions, developing adaptive speech output systems that consider individual user needs, personality traits, and contextual demands is crucial for optimizing user experience. To address these challenges, researchers propose speech recognition models enhanced by deep learning technologies. These models incorporate context-aware functions and real-time semantic analysis, significantly improving the adaptability of speech systems (Guo et al., 2021; Tyagi and Szénási, 2024).

Future designs for speech interaction can further optimize user experience by integrating multimodal technologies, such as speech combined with touchscreens or gestures. For instance, speech systems can dynamically adjust interaction strategies based on real-time detection of drivers' intentions and operational needs, providing smarter and more personalized services (Farooq et al., 2019; Kaplan, 2009). By enhancing the precision, adaptability, and multilingual support of speech recognition, speech interaction is expected to become an increasingly efficient and safe core technology in future human-vehicle interaction systems (Fumelli et al., 2024).

3.2 RQ2 What are the key UX factors?

3.2.1 Extraction of user experience factors

To answer the second research question, we standardized the various terms related to UX used in the 23 studies to ensure consistency. The factors related to UX mentioned in each paper were obtained through extraction (see Table 2).

| Interface | Type of interaction | Author (Year) | UX factors |
| --- | --- | --- | --- |
| GUI | Physical button | Jung et al. (2021) | Efficiency, Usability, Recognizability |
| | | Tan et al. (2022) | Usability, Efficiency, Learnability |
| | | Zhong et al. (2022) | Usability, Safety |
| | | Huo et al. (2024) | Responsiveness, Usability |
| | | Yi et al. (2024) | Usability, Safety |
| | | Detjen et al. (2021) | Responsiveness, Recognizability |
| | Touch screen | Jung et al. (2021) | Learnability, Usability, Entertainment, Safety |
| | | Murali (2022) | Responsiveness, Usability |
| | | Huo et al. (2024) | Usability, Responsiveness |
| | | Nagy et al. (2023) | Cognitive load, Efficiency |
| | | Kim et al. (2014) | Usability, Safety, Responsiveness |
| | | Čegovnik et al. (2020) | Responsiveness, Cognitive load, Stimulation |
| | | Zhang et al. (2023) | Usability, Safety |
| | | Farooq et al. (2019) | Usability, Responsiveness, Safety, Efficiency |
| | | Zhong et al. (2022) | Usability, Safety |
| | | Detjen et al. (2021) | Safety |
| | Gesture | Tan et al. (2022) | Error rate, Usability |
| | | Čegovnik et al. (2020) | Error rate, Usability, Cognitive load, Efficiency |
| | | Zhang et al. (2023) | Safety, Novelty |
| | | Bilius and Vatavu (2020) | Cognitive load, Novelty, Usability, Efficiency |
| | | Zhang et al. (2022) | Error rate, Cognitive load, Safety |
| | | Graichen and | Trust, Usability, Novelty, Stimulation |
| | | Detjen et al. (2021) | Efficiency |
| CUI | Speech | Jakus et al. (2015) | Efficiency, Novelty |
| | | Tan et al. (2022) | Responsiveness, Usability, Accuracy of recognition |
| | | Ruijten et al. (2018) | Naturalness, Anthropomorphism, Trust |
| | | Deng et al. (2024) | Accuracy of recognition, Trust |
| | | Xie et al. (2024) | Responsiveness, Usability |
| | | Murali et al. (2022) | Entertainment, Naturalness, Safety |
| | | Banerjee et al. (2020) | Accuracy of recognition, Safety |
| | | Johnson (2021) | Accuracy of recognition, Safety, Personalization |
| | | Ruijten et al. (2018) | Anthropomorphism, Trust |
| | | Detjen et al. (2021) | Naturalness, Anthropomorphism, Accuracy of recognition |

3.2.2 Key user experience factors and analysis

After summarizing the UX factors in the literature above, we extracted the factors commonly mentioned for GUI and CUI interfaces. Factors mentioned two or more times in the literature were regarded as important and are summarized in Table 3. For GUI, Usability appeared 17 times, Efficiency 6 times, Learnability 2 times, Cognitive load 5 times, Responsiveness 7 times, and Safety 11 times. For CUI, Naturalness appeared 3 times, Efficiency 2 times, Responsiveness 2 times, Usability 3 times, Accuracy of recognition 5 times, Trust 3 times, Safety 3 times, and Anthropomorphism 3 times. The key UX factors are as follows:

| Interface | Key UX factors | Consideration |
| --- | --- | --- |
| GUI | Usability | Driver can use and interact with the system easily, effectively and conveniently |
| | Efficiency | Ability to present critical information and enable task completion quickly |
| | Learnability | Driver can understand and master the visual system's functionality, ensuring |
| | Cognitive load | Mental effort required by drivers to process and understand information |
| | Responsiveness | Ability to provide immediate and accurate feedback to user inputs, ensuring |
| | Safety | Ability to minimize driver distraction and support safe driving behaviors by |
| CUI | Naturalness | Ability to enable intuitive communication that aligns with natural speech |
| | Efficiency | Quickly and accurately process user inputs and deliver the desired outcomes, minimizing the driver's effort and time required for interaction |
| | Responsiveness | Ability to provide timely, contextually relevant, and seamless feedback to |
| | Usability | The construction of voice commands needs to cover rich scenarios to |
| | Accuracy of recognition | Ability to correctly interpret and process user commands or speech inputs without errors |
| | Trust | The conversational system's reliability, performance, and ability to provide accurate and relevant results during interaction |
| | Safety | Interaction through dialog to support safe vehicle operation ensures that |
| | Anthropomorphism | The extent to which the system mimics human-like qualities like the tone, |
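The frequency tally described above, counting how often each factor is mentioned per interface and keeping those mentioned two or more times, can be sketched as follows. The rows are only a small illustrative subset of Table 2, so the resulting counts do not reproduce the totals reported in the text, and the names `ROWS` and `key_factors` are hypothetical.

```python
from collections import Counter

# Illustrative SUBSET of Table 2: (interface, author, UX factors).
ROWS = [
    ("GUI", "Jung et al. (2021)", ["Efficiency", "Usability", "Recognizability"]),
    ("GUI", "Tan et al. (2022)", ["Usability", "Efficiency", "Learnability"]),
    ("GUI", "Zhong et al. (2022)", ["Usability", "Safety"]),
    ("CUI", "Ruijten et al. (2018)", ["Naturalness", "Anthropomorphism", "Trust"]),
    ("CUI", "Deng et al. (2024)", ["Accuracy of recognition", "Trust"]),
    ("CUI", "Jakus et al. (2015)", ["Efficiency", "Novelty"]),
]


def key_factors(rows, interface, min_mentions=2):
    """Count factor mentions for one interface and keep the frequent ones.

    min_mentions=2 follows the "two or more" rule stated in the text.
    """
    counts = Counter(
        factor
        for row_interface, _author, factors in rows
        if row_interface == interface
        for factor in factors
    )
    return {factor: n for factor, n in counts.items() if n >= min_mentions}


gui_key = key_factors(ROWS, "GUI")
cui_key = key_factors(ROWS, "CUI")
print("GUI:", gui_key)
print("CUI:", cui_key)
```

Running the same function over every row of Table 2 would give the full tally. Standardizing factor names before counting matters, since variants of the same term would otherwise be counted separately.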

Through a review of the literature, the key factors for GUI were identified as usability, efficiency, learnability, responsiveness, cognitive load, and safety. In the context of iHMI systems, usability is a critical factor for drivers' acceptance of in-vehicle technology (Stevens and Burnett, 2014). Most studies emphasize the usability of various technologies and functionalities in visual interfaces. Usability evaluations often rely on standardized tools such as the System Usability Scale (SUS), which provides a subjective and quantitative assessment of users' overall experience in terms of ease of use, learnability, and operational satisfaction with the interface (Brooke, 1996). Additionally, studies have demonstrated a direct relationship between improved usability and driving safety. In particular, a well-designed interface layout can significantly reduce the distraction caused by interacting with the interface, especially in complex driving environments (Li et al., 2017).
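For readers unfamiliar with the SUS, its standard scoring procedure is simple enough to sketch. The questionnaire has ten items rated from 1 to 5. Odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name `sus_score` is illustrative and does not come from the reviewed studies.

```python
def sus_score(responses):
    """Compute a System Usability Scale score (0 to 100).

    responses: ten integer ratings from 1 to 5, in questionnaire order.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: higher rating is better.
            total += rating - 1
        else:
            # Even items are negatively worded: lower rating is better.
            total += 5 - rating
    return total * 2.5


best_case = sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1])
neutral = sus_score([3] * 10)
print(best_case, neutral)
```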

Efficiency is another critical factor in human-vehicle interaction. Its core goal is to ensure that drivers can complete tasks with minimal time and cognitive effort. This principle is reflected in the continuous iteration and optimization of interaction methods, evolving from physical buttons to touchscreens and gesture-based interactions, aiming to enhance system responsiveness and information transmission efficiency. For instance, the efficiency of touch-based interactions depends on the consistency of layout and logical arrangement of buttons in UI design, while gesture-based interactions focus on minimizing operational steps and reducing the risk of accidental triggers (Zhang et al., 2023). Fast and accurate interactions enable drivers to complete necessary tasks in the shortest possible time, reducing attention diversion and enhancing the overall driving experience.

As smart vehicle technologies advance, human-vehicle interaction functionalities are becoming increasingly complex, which imposes higher demands on the learnability of user interfaces. Learnability, defined as the ease with which users can master new interfaces and technological features, is regarded as a key factor in user acceptance of new systems (Noel et al., 2005). In multimodal interaction contexts, such as the integration of gestures and voice, interfaces must simplify operational logic and provide clear feedback to reduce the initial learning cost (Schmidt et al., 2010). Furthermore, incorporating step-by-step guidance and intelligent hints into interface design has been shown to significantly enhance learnability (Li and Sun, 2021).

Cognitive load refers to the mental effort users must expend to process information; in iHMI visual interface design, the goal is to minimize it. Interfaces with high cognitive load can lead to driver distraction, delayed information processing, or operational errors, thereby increasing the risk of traffic accidents (Strayer, 2015). Constantine and Windl (2009) emphasized that, in designing in-vehicle visual interfaces, prioritizing simplified interaction processes over complex workflows is essential for improving user recognition, interpretation, and task completion speed while reducing driver distraction and cognitive load.

Responsiveness, defined as the system's timely feedback to user inputs, is another critical factor influencing driving experience and user satisfaction. Delays in system feedback can increase feelings of frustration, anger, and agitation while reducing satisfaction (Szameitat et al., 2009). To enhance safety and user experience, unobtrusive and function-specific feedback methods have been proposed to communicate system uncertainty and encourage appropriate use (Kunze et al., 2017). Therefore, timely and accurate feedback plays a vital role in maintaining user trust, ensuring safe driving performance, and optimizing the overall driving experience.

The increasing complexity of automotive user interfaces poses challenges to driver safety due to potential distractions and information overload (Kern and Schmidt, 2009). To address this core safety concern, researchers have proposed multimodal interaction technologies that support attention switching between the road and in-vehicle systems while minimizing visual distractions (Pfleging et al., 2012). Driver-based interface design spaces have been developed to analyze and compare different UI configurations, potentially improving interaction methods (Kern and Schmidt, 2009). Green (2008) reviewed established standards and guidelines for evaluating driver interfaces, such as those of the Society of Automotive Engineers (SAE) and the International Organization for Standardization (ISO), which aim to minimize distraction and information overload.

The aforementioned usability factors are relevant across the three types of GUI interaction methods (physical buttons, touchscreens, and gestures). However, some usability factors are specific to a single interaction method. For instance, button recognizability pertains to physical buttons, entertainment value to touchscreen interaction, and novelty to gesture interaction. These factors are considered essential for evaluating the usability of each individual interaction method but are not classified as key factors for GUI as a whole.

The key UX factors identified for CUI through the literature review include naturalness, efficiency, responsiveness, usability, accuracy of recognition, trust, safety, and anthropomorphism. Naturalness is a core factor for conversational voice interfaces, referring to the intuitive communication enabled by interfaces that align with natural language patterns and behaviors. This natural interaction lowers the barrier to using the system by eliminating the need for users to learn complex voice command formats. Studies have shown that improving natural language processing capabilities significantly enhances user acceptance of CUI (M et al., 2023). Rosekind et al. (1997) highlighted that active participation in dialogue is more effective than passive listening in maintaining driver alertness. Hence, the design of CUIs should prioritize conversational naturalness over rigid command-based interactions.

Efficiency is a critical attribute of iHMI systems. For CUI, it refers to the system's ability to process voice inputs quickly and accurately while providing timely feedback, which minimizes the driver's workload and interaction time. In driving contexts, the recognition and processing of user commands by conversational voice systems directly affect user satisfaction and interaction frequency. Moreover, compared with traditional manual systems, voice systems have been shown to significantly reduce cognitive distraction and enhance driving safety (Carter and Graham, 2000).

Responsiveness is a critical factor for the success of CUI, emphasizing the system's ability to provide timely and contextually relevant feedback in response to user queries or commands. High responsiveness not only enhances user experience but also reduces the operational anxiety caused by waiting. Delays may require users to engage in interaction management (Danilava et al., 2013), which increases risk and insecurity while driving. In conversational agent interactions, unnaturally long delays may be perceived as errors, while excessively fast responses may come across as rude (Funk et al., 2020). Therefore, CUI design must carefully balance engaging dialogue with effective, timely responses to improve usability and acceptability in automotive environments.

Usability reflects the comprehensiveness of CUI design, ensuring that voice commands can accommodate a wide range of scenarios and that the system delivers smooth interaction capabilities. Highly usable systems must not only be easy to learn and use but also support diverse functionalities, such as multilingual adaptation or voice operations in complex scenarios. With the widespread application of large language models (LLMs) in CUI, particularly in speech recognition and natural language understanding, studies indicate that improving the recognition of non-standard language inputs, adapting to noisy environments (Sokol et al., 2017), and providing personalized, context-aware interactions (Lin et al., 2018) can significantly enhance the usability of human-vehicle systems.

Accuracy of recognition is one of the fundamental performance metrics of voice interfaces, determining whether user speech inputs can be correctly identified and understood by the system. This encompasses not only the recognition of speech content but also the ability to interpret intonation, dialects, and speech in noisy environments. Notably, emotional content in speech provides additional information about the speaker's psychological state (Elkins and Derrick, 2013). For instance, Krajewski et al. (2008) demonstrated that fatigue states could be detected through acoustic features of speech, which can contribute to improving safety during driving.

Trust is a decisive factor in shaping how individuals interact with technology (Hoff and Bashir, 2015). For intelligent vehicle CUIs, the level of trust a driver has in the system is regarded as a critical determinant of efficiency and safety in highly automated driving (Ekman et al., 2018). Trust in CUIs is influenced by the system's anthropomorphism, perceived controllability, and intelligence (Ruijten et al., 2018). However, excessive trust in the system can lead to misuse of automation. For example, if drivers overly rely on voice feedback, they may fail to make correct judgments and timely responses in unexpected driving situations.

Safety is a core element of concern for CUI. Distraction during driving is one of the primary causes of traffic accidents, and the prevalence of traditional in-car technologies that rely on visual interfaces has raised concerns about driver distraction and safety (Rakotonirainy, 2003; Stevens, 2000). The most significant feature of CUI is its ability to reduce the need for visual and manual operation. By allowing drivers to keep their hands on the steering wheel and their eyes on the road, CUIs substantially decrease driver distraction and visual cognitive load, thereby enhancing driving safety (Huang and Huang, 2018).

Anthropomorphism is often defined as the degree to which human-like characteristics are attributed to non-human agents (Bartneck et al., 2009). Compared to graphical interfaces, anthropomorphic CUIs that mimic human behaviors and apply conversational principles have been shown to enhance trust, likability, and perceived intelligence (Ruijten et al., 2018). Waytz et al. (2014) examined the effects of anthropomorphism on trust and likability and found that when a car was anthropomorphized (e.g., assigned a name, gender, and voice), people tended to like and trust it more. However, for CUI systems, achieving trust through human-like traits requires more than a superficial resemblance. It is more critical for automated systems to demonstrate human-level understanding, operation, and feedback behaviors during communication than to merely exhibit human-like appearances.

4.1 Discussion on research question 1

In addressing RQ1, the study found that the existing literature primarily categorizes GUI into three types: physical buttons, touchscreens, and gestures, while CUI mainly focuses on speech-based interaction. As a traditional visual-driven interaction method, GUI excels in improving drivers' cognitive efficiency and operational accuracy due to its rich information and intuitive design. However, GUI also faces challenges such as high cognitive load and the potential to distract drivers in dynamic driving environments. In contrast, CUI enables hands-free operation through voice commands, significantly reducing drivers' cognitive load and visual distraction. However, its performance is still constrained by the accuracy of voice recognition technologies and interference from environmental noise. Gesture-based interaction, as a complement to GUI, provides a more natural and intuitive interaction method. Nonetheless, its accuracy and reliability are limited by the current state of sensor technology. In particular, the error rate of gesture recognition remains a challenge in complex in-vehicle environments and requires further improvement.

4.2 Discussion on research question 2

In addressing RQ2, the study identified key user experience (UX) factors for GUI and CUI. For GUI, the main UX factors include usability, efficiency, learnability, responsiveness, cognitive load, and safety. These factors highlight the need for interface design to balance ease of operation with information presentation to ensure driving safety and operational efficiency. For example, physical buttons have advantages in emergency operations due to their intuitiveness and low learning cost, while touchscreens enhance user experience through high customizability and multitasking management. However, touchscreen designs must still limit visual distraction to maintain driving safety. For CUI, the critical UX factors include naturalness, efficiency, responsiveness, usability, recognition accuracy, trust, safety, and anthropomorphism. Natural and anthropomorphic designs can enhance users' trust and acceptance of the system, while high efficiency and responsiveness ensure smooth and timely voice interactions. Recognition accuracy directly affects the user's operational experience and the reliability of the system, while reducing the need for visual and manual operations enhances overall driving safety.

4.3 Limitations and future directions

Despite the comprehensive analysis of the existing literature through systematic review, this study has certain limitations. First, the literature screening process focused only on the primary interaction methods of GUI and CUI, potentially overlooking other interaction methods in human-vehicle interaction systems, such as eye-tracking interactions. Second, the research primarily concentrated on technical analyses, lacking empirical studies on actual user behavior and long-term usage experiences. Future research can incorporate both quantitative and qualitative methods to further validate and expand the findings of this study. Additionally, as artificial intelligence and big data technologies continue to evolve, exploring intelligent, personalized iHMI designs and the integration of multimodal interactions will be important directions for future research.

This study reviewed relevant literature in the iHMI field, analyzing the user experience (UX) factors of graphical user interfaces (GUI) and conversational user interfaces (CUI) in in-vehicle human-machine interfaces. It revealed the application status and critical influencing factors of GUI and CUI in modern smart cars. By gaining a deeper understanding of these UX factors, designers can more effectively optimize iHMI systems to enhance driving experience and safety. Future research should further explore the integrated application of multimodal interaction methods and the potential of intelligent technologies in iHMI to drive the continuous development of human-machine interaction technology in smart cars.

References

1. Bae, S.Y., Cha, M.C., Yoon, S.H. and Lee, S.C., Investigation of Touch Button Size and Touch Screen Position of IVIS in a Driving Context. Journal of the Ergonomics Society of Korea, 42(1), 39-55, 2023. doi.org/10.5143/JESK.2023.42.1.39

2. Banerjee, A., Maity, S.S., Banerjee, W., Saha, S. and Bhattacharyya, T., Facial and voice recognition-based security and safety system in cars, 2020 8th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions), 812-814, 2020. https://doi.org/10.1109/ICRITO48877.2020.9197886

3. Bartneck, C., Kulić, D., Croft, E. and Zoghbi, S., Measurement Instruments for the Anthropomorphism, Animacy, Likeability, Perceived Intelligence, and Perceived Safety of Robots. International Journal of Social Robotics, 1(1), 71-81, 2009. doi.org/10.1007/s12369-008-0001-3

4. Bastola, A., Brinkley, J., Wang, H. and Razi, A., Driving Towards Inclusion: Revisiting In-Vehicle Interaction in Autonomous Vehicles (arXiv:2401.14571). arXiv., 2024. doi.org/10.48550/arXiv.2401.14571

5. Beringer, D.B. and Maxwell, S.R., The Use of Touch-Sensitive Human-Computer Interfaces: Behavioral and Design Implications. Proceedings of the Human Factors Society Annual Meeting, 26(5), 435-435, 1982. doi.org/10.1177/154193128202600510

6. Berman, S. and Stern, H., Sensors for Gesture Recognition Systems. IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews), 42(3), 277-290, 2012. doi.org/10.1109/TSMCC.2011.2161077

7. Bilius, L.B. and Vatavu, R.D., A multistudy investigation of drivers and passengers' gesture and voice input preferences for in-vehicle interactions, Journal of Intelligent Transportation Systems, 25(2), 197-220, 2020. https://doi.org/10.1080/15472450.2020.1846127

8. Brooke, J., SUS: A 'Quick and Dirty' Usability Scale. In Usability Evaluation In Industry. CRC Press. 1996.

9. Brouet, R., Multi-touch gesture interactions and deformable geometry for 3D edition on touch screen [Ph.D. thesis, Université de Grenoble, France], 2015. https://hal.science/tel-01517102 (retrieved December 3, 2024).

10. Carter, C. and Graham, R., Experimental Comparison of Manual and Voice Controls for the Operation of in-Vehicle Systems. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 44(20), 3-286, 2000. doi.org/10.1177/154193120004402016

11. Čegovnik, T. and Sodnik, J., Free-hand human-machine interaction in vehicles, 2016.

12. Čegovnik, T., Stojmenova, K., Tartalja, I. and Sodnik, J., Evaluation of different interface designs for human-machine interaction in vehicles, Multimedia Tools and Applications, 79(29), 21361-21388, 2020. https://doi.org/10.1007/s11042-020-08920-8

13. Chen, X., Dong, X. and Yu, Y., Human-Computer Interaction Design of Mobile UI Interface for Autonomous Intelligent Vehicles. Computer-Aided Design and Applications, 94-108, 2024. doi.org/10.14733/cadaps.2025.S7.94-108

14. Choe, M., Bosch, E., Dong, J., Alvarez, I., Oehl, M., Jallais, C., Alsaid, A., Nadri, C. and Jeon, M., Emotion GaRage Vol. IV: Creating Empathic In-Vehicle Interfaces with Generative AIs for Automated Vehicle Contexts. Adjunct Proceedings of the 15th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, 234-236, 2023. doi.org/10.1145/3581961.3609828

15. Constantine, L. and Windl, H., Safety, speed, and style: Interaction design of an in-vehicle user interface. CHI '09 Extended Abstracts on Human Factors in Computing Systems, 2675-2678, 2009. doi.org/10.1145/1520340.1520383

16. Danilava, S., Busemann, S., Schommer, C. and Ziegler, G., Why are you Silent? - Towards Responsiveness in Chatbots. "Avec Le Temps! Time, Tempo, and Turns in Human-Computer Interaction". Workshop at CHI 2013, Paris, France. https://orbilu.uni.lu/handle/10993/16765 (retrieved December 3, 2024).

17. DanNuo, J., Xin, H., JingHan, X. and Ling, W., Design of Intelligent Vehicle Multimedia Human-Computer Interaction System. IOP Conference Series: Materials Science and Engineering, 563(5), 052029, 2019. doi.org/10.1088/1757-899X/563/5/052029

18. Deng, M., Chen, J., Wu, Y., Ma, S., Li, H., Yang, Z. and Shen, Y., Using voice recognition to measure trust during interactions with automated vehicles, Applied Ergonomics, 116, 104184, 2024. https://doi.org/10.1016/j.apergo.2023.104184

19. Detjen, H., Faltaous, S., Pfleging, B., Geisler, S. and Schneegass, S., How to increase automated vehicles' acceptance through in-vehicle interaction design: A review, International Journal of Human-Computer Interaction, 37(4), 308-330, 2021. https://doi.org/10.1080/10447318.2020.1860517

20. D'Eusanio, A., Simoni, A., Pini, S., Borghi, G., Vezzani, R. and Cucchiara, R., Multimodal Hand Gesture Classification for the Human-Car Interaction. Informatics, 7(3), 2020. doi.org/10.3390/informatics7030031

21. Duolikun, W., Wang, B., Yang, X., Niu, H., Wan, X., Xue, Q. and Zhao, Y., Effect of Secondary Tasks in Touchscreen In-Vehicle Information System Operation on Driving Distraction. 14th International Conference on Applied Human Factors and Ergonomics (AHFE 2023). doi.org/10.54941/ahfe1003790

22. Ekman, F., Johansson, M. and Sochor, J., Creating Appropriate Trust in Automated Vehicle Systems: A Framework for HMI Design. IEEE Transactions on Human-Machine Systems, 48(1), 95-101, 2018. doi.org/10.1109/THMS.2017.2776209

23. Elkins, A.C. and Derrick, D.C., The Sound of Trust: Voice as a Measurement of Trust During Interactions with Embodied Conversational Agents. Group Decision and Negotiation, 22(5), 897-913, 2013. doi.org/10.1007/s10726-012-9339-x

24. Farooq, A., Evreinov, G. and Raisamo, R., Reducing driver distraction by improving secondary task performance through multimodal touchscreen interaction. SN Applied Sciences, 1(8), 905, 2019. doi.org/10.1007/s42452-019-0923-4

25. Ferris, T.K., Suh, Y., Miles, J.D. and Texas A&M Transportation Institute., Investigating the roles of touchscreen and physical control interface characteristics on driver distraction and multitasking performance. 2016. https://rosap.ntl.bts.gov/view/dot/30788 (retrieved December 3, 2024).

26. Fumelli, C., Dutta, A. and Kaboli, M., Advancements in Tactile Hand Gesture Recognition for Enhanced Human-Machine Interaction (arXiv:2405.17038). arXiv, 2024. doi.org/10.48550/arXiv.2405.17038

27. Funk, M., Cunningham, C., Kanver, D., Saikalis, C. and Pansare, R., Usable and Acceptable Response Delays of Conversational Agents in Automotive User Interfaces. 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, 262-269, 2020. doi.org/10.1145/3409120.3410651

28. Gao, F., Ge, X., Li, J., Fan, Y., Li, Y. and Zhao, R., Intelligent Cockpits for Connected Vehicles: Taxonomy, Architecture, Interaction Technologies, and Future Directions. Sensors, 24(16), 2024. doi.org/10.3390/s24165172

29. Garikapati, D. and Shetiya, S.S., Autonomous Vehicles: Evolution of Artificial Intelligence and the Current Industry Landscape. Big Data and Cognitive Computing, 8(4), 2024. doi.org/10.3390/bdcc8040042

30. Graichen, L. and Graichen, M., Do users desire gestures for in-vehicle interaction? Towards the subjective assessment of gestures in a high-fidelity driving simulator, Computers in Human Behavior, 156, 108189, 2024. https://doi.org/10.1016/j.chb.2024.108189

31. Green, P., Driver Interface/HMI Standards to Minimize Driver Distraction/Overload (SAE Technical Paper 2008-21-0002). SAE International. https://www.sae.org/publications/technical-papers/content/2008-21-0002/ (retrieved December 3, 2024).

32. Guo, D., Zhang, Z., Fan, P., Zhang, J. and Yang, B., A Context-Aware Language Model to Improve the Speech Recognition in Air Traffic Control. Aerospace, 8(11), 2021. doi.org/10.3390/aerospace8110348

33. Harrison, C. and Hudson, S.E., Providing dynamically changeable physical buttons on a visual display. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 299-308, 2009. doi.org/10.1145/1518701.1518749

34. Hoff, K.A. and Bashir, M., Trust in Automation: Integrating Empirical Evidence on Factors That Influence Trust. Human Factors, 57(3), 407-434, 2015. doi.org/10.1177/0018720814547570

35. Huang, Z. and Huang, X., A study on the application of voice interaction in automotive human machine interface experience design. AIP Conference Proceedings, 1955(1), 040074, 2018. doi.org/10.1063/1.5033738

36. Huo, F., Wang, T., Fang, F. and Sun, C., The influence of tactile feedback in in-vehicle central control interfaces on driver emotions: A comparative study of touchscreens and physical buttons, International Journal of Industrial Ergonomics, 101, 103586, 2024. https://doi.org/10.1016/j.ergon.2024.103586

37. Jakus, G., Dicke, C. and Sodnik, J., A user study of auditory, head-up and multi-modal displays in vehicles. Applied Ergonomics, 46, 184-192, 2015. doi.org/10.1016/j.apergo.2014.08.008

38. Jansen, P., Colley, M. and Rukzio, E., A Design Space for Human Sensor and Actuator Focused In-Vehicle Interaction Based on a Systematic Literature Review. Proceedings of the ACM Interact. Mobile Wearable Ubiquitous Technologies, 6(2), 1-51, 2022. doi.org/10.1145/3534617

39. Jianan, L. and Abas, A., Development of Human-Computer Interactive Interface for Intelligent Automotive. International Journal of Artificial Intelligence, 7(2), 2020. doi.org/10.36079/lamintang.ijai-0702.134

40. Johnson, S., Enhancing context-aware voice recognition systems for improved user experience in cars, International Journal of Engineering, 6(12), 2021.

41. Jonsson, I.M. and Dahlbäck, N., Driving with a Speech Interaction System: Effect of Personality on Performance and Attitude of Driver. In M. Kurosu (Ed.), Human-Computer Interaction. Advanced Interaction Modalities and Techniques (pp. 417-428), 2014. doi.org/10.1007/978-3-319-07230-2_40

42. Jung, S., Park, J., Park, J., Choe, M., Kim, T., Choi, M. and Lee, S., Effect of Touch Button Interface on In-Vehicle Information Systems Usability, International Journal of Human-Computer Interaction, 37(15), 1404-1422, 2021. https://doi.org/10.1080/10447318.2021.1886484

43. Kaplan, F., Are gesture-based interfaces the future of human computer interaction? Proceedings of the 2009 International Conference on Multimodal Interfaces, 239-240, 2009. doi.org/10.1145/1647314.1647365

44. Kareem Murad, B. and H. Hassin Alasadi, A., Advancements and Challenges in Hand Gesture Recognition: A Comprehensive Review. Iraqi Journal for Electrical and Electronic Engineering, 20(2), 154-164, 2024. doi.org/10.37917/ijeee.20.2.13

45. Kern, D. and Schmidt, A., Design space for driver-based automotive user interfaces. Proceedings of the 1st International Conference on Automotive User Interfaces and Interactive Vehicular Applications, 3-10, 2009. doi.org/10.1145/1620509.1620511

46. Kim, H., Kwon, S., Heo, J., Lee, H. and Chung, M.K., The effect of touch-key size on the usability of in-vehicle information systems and driving safety during simulated driving, Applied Ergonomics, 45(3), 379-388, 2014. https://doi.org/10.1016/j.apergo.2013.05.006

47. Kim, J., Ryu, J.H. and Han, T.M., Multimodal Interface Based on Novel HMI UI/UX for In-Vehicle Infotainment System, Electronics and Telecommunications Research Institute Journal, 37(4), 793-803, 2015. https://doi.org/10.4218/etrij.15.0114.0076

48. Krajewski, J., Wieland, R. and Batliner, A., An Acoustic Framework for Detecting Fatigue in Speech Based Human-Computer-Interaction. In K. Miesenberger, J. Klaus, W. Zagler, and A. Karshmer (Eds.), Computers Helping People with Special Needs (pp. 54-61), 2008. doi.org/10.1007/978-3-540-70540-6_7

49. Kunze, A., Summerskill, S.J., Marshall, R. and Filtness, A.J., Enhancing Driving Safety and User Experience Through Unobtrusive and Function-Specific Feedback. Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications Adjunct, 183-189, 2017. doi.org/10.1145/3131726.3131762

50. Large, D.R., Burnett, G. and Clark, L., Lessons from Oz: Design guidelines for automotive conversational user interfaces. Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, 335-340, 2019. doi.org/10.1145/3349263.3351314

51. Large, D.R., Burnett, G., Crundall, E., Lawson, G., Skrypchuk, L. and Mouzakitis, A., Evaluating secondary input devices to support an automotive touchscreen HMI: A cross-cultural simulator study conducted in the UK and China. Applied Ergonomics, 78, 184-196, 2019. doi.org/10.1016/j.apergo.2019.03.005

52. Lee, S.H. and Yoon, S.O., User interface for in-vehicle systems with on-wheel finger spreading gestures and head-up displays. Journal of Computational Design and Engineering, 7(6), 700-721, 2020. doi.org/10.1093/jcde/qwaa052

53. Lee, S.H., Yoon, S.O. and Shin, J.H., On-wheel finger gesture control for in-vehicle systems on central consoles. Adjunct Proceedings of the 7th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, 94-99, 2015. doi.org/10.1145/2809730.2809739

54. Lee, S. and Zhai, S., The performance of touch screen soft buttons. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 309-318, 2009. doi.org/10.1145/1518701.1518750

55. Leftheriotis, I. and Chorianopoulos, K., User experience quality in multi-touch tasks. Proceedings of the 3rd ACM SIGCHI Symposium on Engineering Interactive Computing Systems, 277-282, 2011. doi.org/10.1145/1996461.1996536

56. Li, G. and Sun, Y., "How Do I Use This Car?": The Learnability of Interactive Design for Intelligent Connected Vehicles. In F. Rebelo (Ed.), Advances in Ergonomics in Design (pp. 223-230), 2021. doi.org/10.1007/978-3-030-79760-7_27

57. Li, Q., Wang, J. and Luo, T., A Comparative Study of In-Car HMI Interaction Modes Based on User Experience. In C. Stephanidis, M. Antona, and S. Ntoa (Eds.), HCI International 2021—Posters (pp. 257-261), 2021. doi.org/10.1007/978-3-030-78645-8_32

58. Li, R., Chen, Y.V., Sha, C. and Lu, Z., Effects of interface layout on the usability of In-Vehicle Information Systems and driving safety. Displays, 49, 124-132, 2017. doi.org/10.1016/j.displa.2017.07.008

59. Li, S., Zhou, L., Fan, M. and Xiong, Y., A comprehensive analysis of gesture recognition systems: Advancements, challenges, and future direct. ResearchGate, 2024. https://www.researchgate.net/publication/382370043_A_comprehensive_analysis_of_gesture_recognition_systems_Advancements_challenges_and_future_direct (retrieved December 3, 2024).

60. Lin, S.C., Hsu, C.H., Talamonti, W., Zhang, Y., Oney, S., Mars, J. and Tang, L., Adasa: A Conversational In-Vehicle Digital Assistant for Advanced Driver Assistance Features. Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology, 531-542, 2018. doi.org/10.1145/3242587.3242593

61. M, M.J., J, S., T, J., T, N., T, K. and N, A., Advancements in Human-Machine Interaction: Exploring Natural Language Processing and Gesture Recognition for Intuitive User Interfaces. 2023 International Conference on Sustainable Emerging Innovations in Engineering and Technology (ICSEIET), 201-205, 2023. doi.org/10.1109/ICSEIET58677.2023.10303031

62. Mandujano-Granillo, J.A., Candela-Leal, M.O., Ortiz-Vazquez, J.J., Ramirez-Moreno, M.A., Tudon-Martinez, J.C., Felix-Herran, L.C., Galvan-Galvan, A. and Lozoya-Santos, J.D.J., Human-Machine Interfaces: A Review for Autonomous Electric Vehicles. IEEE Access, 12, 121635-121658, 2024. doi.org/10.1109/ACCESS.2024.3450439

63. Merenda, C., Kim, H., Tanous, K., Gabbard, J.L., Feichtl, B., Misu, T. and Suga, C., Augmented Reality Interface Design Approaches for Goal-directed and Stimulus-driven Driving Tasks. IEEE Transactions on Visualization and Computer Graphics, 24(11), 2875-2885, 2018. doi.org/10.1109/TVCG.2018.2868531

64. Moher, D., Liberati, A., Tetzlaff, J., Altman, D.G. and the PRISMA Group., Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. Annals of Internal Medicine, 151(4), 264-269, 2009. doi.org/10.7326/0003-4819-151-4-200908180-00135

65. Morvaridi Farimani, H., Investigating the User Experience of Physical and Digital Interfaces in Automotive Design. 2020. http://hdl.handle.net/20.500.12380/309002 (retrieved December 3, 2024).

66. Murali, P.K., Kaboli, M. and Dahiya, R., Intelligent In-Vehicle Interaction Technologies. Advanced Intelligent Systems, 4(2), 2100122, 2022. doi.org/10.1002/aisy.202100122

67. Nagy, V., Kovács, G., Földesi, P., Kurhan, D., Sysyn, M., Szalai, S. and Fischer, S., Testing road vehicle user interfaces concerning the driver's cognitive load, Infrastructures, 8(3), 2023. https://doi.org/10.3390/infrastructures8030049

68. Noel, E., Nonnecke, B. and Trick, L., A Comprehensive Learnability Evaluation Method for In-Car Navigation Devices (SAE Technical Paper 2005-01-1605). SAE International. 2005. doi.org/10.4271/2005-01-1605

69. Norman, D.A., The Design of Everyday Things, MIT Press, 2013.

70. Pfleging, B., Kern, D., Döring, T. and Schmidt, A., Reducing Non-Primary Task Distraction in Cars Through Multi-Modal Interaction, 54(4), 179-187, 2012. doi.org/10.1524/itit.2012.0679

71. Politis, I., Langdon, P., Adebayo, D., Bradley, M., Clarkson, P.J., Skrypchuk, L., Mouzakitis, A., Eriksson, A., Brown, J.W.H., Revell, K. and Stanton, N., An Evaluation of Inclusive Dialogue-Based Interfaces for the Takeover of Control in Autonomous Cars. Proceedings of the 23rd International Conference on Intelligent User Interfaces, 601-606, 2018. doi.org/10.1145/3172944.3172990

72. Rakotonirainy, A., Human-Computer Interactions: Research Challenges for In-vehicle Technology. Proceedings of Road Safety Research Policing and Education Conference September, 2003.

73. Rosekind, M.R., Gander, P.H., Gregory, K.B., Smith, R.M., Miller, D.L., Oyung, R., Webbon, L.L. and Johnson, J.M., Managing Fatigue in Operational Settings 1: Physiological Considerations and Counter-measures. Hospital Topics, 1997. https://www.tandfonline.com/doi/abs/10.1080/00185868.1997.10543761 (retrieved December 3, 2024).

74. Ruijten, P.A.M., Terken, J.M.B. and Chandramouli, S.N., Enhancing Trust in Autonomous Vehicles through Intelligent User Interfaces That Mimic Human Behavior. Multimodal Technologies and Interaction, 2(4), 2018. doi.org/10.3390/mti2040062

75. Schmidt, A., Dey, A.K., Kun, A.L. and Spiessl, W., Automotive user interfaces: Human computer interaction in the car. CHI '10 Extended Abstracts on Human Factors in Computing Systems, 3177-3180, 2010. doi.org/10.1145/1753846.1753949

76. Sharma, S., Bhadula, S. and Juyal, A., Augmented Reality for Next Generation Connected and Automated Vehicles. 2024 IEEE International Conference on Computing, Power and Communication Technologies (IC2PCT), 5, 23-28, 2024. doi.org/10.1109/IC2PCT60090.2024.10486694

77. Sokol, N., Chen, E.Y. and Donmez, B., Voice-Controlled In-Vehicle Systems: Effects of Voice-Recognition Accuracy in the Presence of Background Noise. Driving Assessment Conference, 9(2017), 2017. doi.org/10.17077/drivingassessment.1629

78. Stevens, A., Safety of driver interaction with in-vehicle information systems. Proceedings of the Institution of Mechanical Engineers, Part D: Journal of Automobile Engineering, 214(6), 639-644, 2000. doi.org/10.1243/0954407001527501

79. Stevens, A. and Burnett, G., Designing In-Vehicle Technology for Usability. In Driver Acceptance of New Technology. CRC Press. 2014.

80. Stier, D., Munro, K., Heid, U. and Minker, W., Towards Situation-Adaptive In-Vehicle Voice Output. Proceedings of the 2nd Conference on Conversational User Interfaces, 1-7, 2020. doi.org/10.1145/3405755.3406127

81. Strayer, D.L., Is the Technology in Your Car Driving You to Distraction? Policy Insights from the Behavioral and Brain Sciences, 2(1), 157-165, 2015. doi.org/10.1177/2372732215600885

82. Szameitat, A.J., Rummel, J., Szameitat, D.P. and Sterr, A., Behavioral and emotional consequences of brief delays in human-computer interaction. International Journal of Human-Computer Studies, 67(7), 561-570, 2009. doi.org/10.1016/j.ijhcs.2009.02.004

83. Talbot, J., An empirical study to assess the impact of mobile touch-screen learning on user information load [Thesis, Anglia Ruskin Research Online (ARRO)]. 2023. https://aru.figshare.com/articles/thesis/An_empirical_study_to_assess_the_impact_of_mobile_touch-screen_learning_on_user_information_load/23762415/1 (retrieved December 3, 2024).

84. Tan, Z., Dai, N., Su, Y., Zhang, R., Li, Y., Wu, D. and Li, S., Human-Machine Interaction in Intelligent and Connected Vehicles: A Review of Status Quo, Issues, and Opportunities. IEEE Transactions on Intelligent Transportation Systems, 23(9), 13954-13975, 2022. doi.org/10.1109/TITS.2021.3127217

85. Trivedi, M.M., Driver intent interface and dynamic displays for active safety: An overview of selected recent studies. SICE Annual Conference 2007, 2402-2405, 2007. doi.org/10.1109/SICE.2007.4421390

86. Tyagi, S. and Szénási, S., Semantic speech analysis using machine learning and deep learning techniques: A comprehensive review. Multimedia Tools and Applications, 83(29), 73427-73456, 2024. doi.org/10.1007/s11042-023-17769-6

87. Wang, B., Xue, Q., Yang, X., Wan, X., Wang, Y. and Qian, C., Driving Distraction Evaluation Model of In-Vehicle Infotainment Systems Based on Driving Performance and Visual Characteristics. Transportation Research Record, 2678(8), 1088-1103, 2024. doi.org/10.1177/03611981231224750

88. Waytz, A., Heafner, J. and Epley, N., The mind in the machine: Anthropomorphism increases trust in an autonomous vehicle. Journal of Experimental Social Psychology, 52, 113-117, 2014. doi.org/10.1016/j.jesp.2014.01.005

89. Xie, J., Wu, R. and Zhang, X., Research on the user experience of smart vehicle interaction in the context of family driving outings travel, 2024 International Symposium on Intelligent Robotics and Systems, 190-193, 2024. https://doi.org/10.1109/ISoIRS63136.2024.00044

90. Yan, Y. and Xie, M., Vision-Based Hand Gesture Recognition for Human-Vehicle Interaction. Proceedings of the International Conference on Control, Automation and Computer Vision, 1998.

91. Yeo, H.S., Lee, B.G. and Lim, H., Hand tracking and gesture recognition system for human-computer interaction using low-cost hardware. Multimedia Tools and Applications, 74(8), 2687-2715, 2015. doi.org/10.1007/s11042-013-1501-1

92. Yin, H., Li, R. and Chen, Y.V., From hardware to software integration: A comparative study of usability and safety in vehicle interaction modes, Displays, 85, 102869, 2024. https://doi.org/10.1016/j.displa.2024.102869

93. Zaletelj, J., Perhavc, J. and Tasic, J.F., Vision-based human-computer interface using hand gestures. Eighth International Workshop on Image Analysis for Multimedia Interactive Services (WIAMIS '07), 41-41, 2007. doi.org/10.1109/WIAMIS.2007.89

94. Zhang, N., Wang, W.X., Huang, S.Y. and Luo, R.M., Mid-air gestures for in-vehicle media player: Elicitation, segmentation, recognition, and eye-tracking testing, SN Applied Sciences, 4(4), 109, 2022. https://doi.org/10.1007/s42452-022-04992-3

95. Zhang, T., Liu, X., Zeng, W., Tao, D., Li, G. and Qu, X., Input modality matters: A comparison of touch, speech, and gesture based in-vehicle interaction. Applied Ergonomics, 108, 103958, 2023. doi.org/10.1016/j.apergo.2022.103958

96. Zhong, Q., Guo, G. and Zhi, J., Address Inputting While Driving: A Comparison of Four Alternative Text Input Methods on In-Vehicle Navigation Displays Usability and Driver Distraction, Traffic Injury Prevention, 23(4), 163-168, 2022. https://doi.org/10.1080/15389588.2022.2047958
